How LLMs and MultiModal AI are Reshaping Recommendation & Search

Beyond the search bar: how Generative AI is rewriting the way we find and discover

Remember when searching online meant typing a few keywords and hoping for the best? It was a bit like playing a guessing game with a giant library. Well, that's really changing.

In today's edition of Where's The Future in Tech, we're diving into how MultiModal AI and Large Language Models (LLMs), the powerful AI behind tools like ChatGPT and Google's Gemini, are shaping this shift.

Traditional Search & Recommender Systems

Before we jump into the exciting new stuff, let's briefly look at how search and recommendation systems used to work.

Traditional search: Think of it as a sophisticated keyword matcher. When you typed in "red running shoes," the system primarily looked for web pages or product listings containing those exact words. It was fast and efficient for direct matches, but struggled with context and nuance. If you misspelled "shoes" as "shose" or used a synonym such as "sneakers," you might get fewer relevant results.
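To make that limitation concrete, here is a deliberately naive keyword matcher built on an inverted index (a map from each word to the documents containing it). The catalog and product IDs are invented, and real engines layered stemming, spelling correction, and ranking functions such as BM25 on top, so treat this as a sketch of the core idea only.

```python
from collections import defaultdict

# Hypothetical toy catalog; IDs and titles are invented for illustration.
CATALOG = {
    "p1": "red running shoes for road racing",
    "p2": "blue trail running shoes",
    "p3": "red sneakers with cushioned soles",
}

def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace: no stemming, spell-check, or synonyms."""
    return text.lower().split()

def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Inverted index: maps each token to the IDs of documents containing it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index

def keyword_search(index: dict[str, set[str]], query: str) -> list[str]:
    """Rank documents by how many query tokens they contain exactly."""
    scores: dict[str, int] = defaultdict(int)
    for token in tokenize(query):
        for doc_id in index.get(token, set()):
            scores[doc_id] += 1
    # Highest score first; ties broken by ID so the order is stable.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))

index = build_index(CATALOG)
print(keyword_search(index, "red running shoes"))  # ['p1', 'p2', 'p3']
print(keyword_search(index, "shose"))              # [] (the typo matches nothing)
print(keyword_search(index, "sneakers"))           # ['p3'] (misses p1 and p2)
```

The typo returns nothing, and the synonym misses two relevant products: exactly the gap that intent-aware search tries to close.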

Recommender systems (the old way): These largely relied on two main approaches:

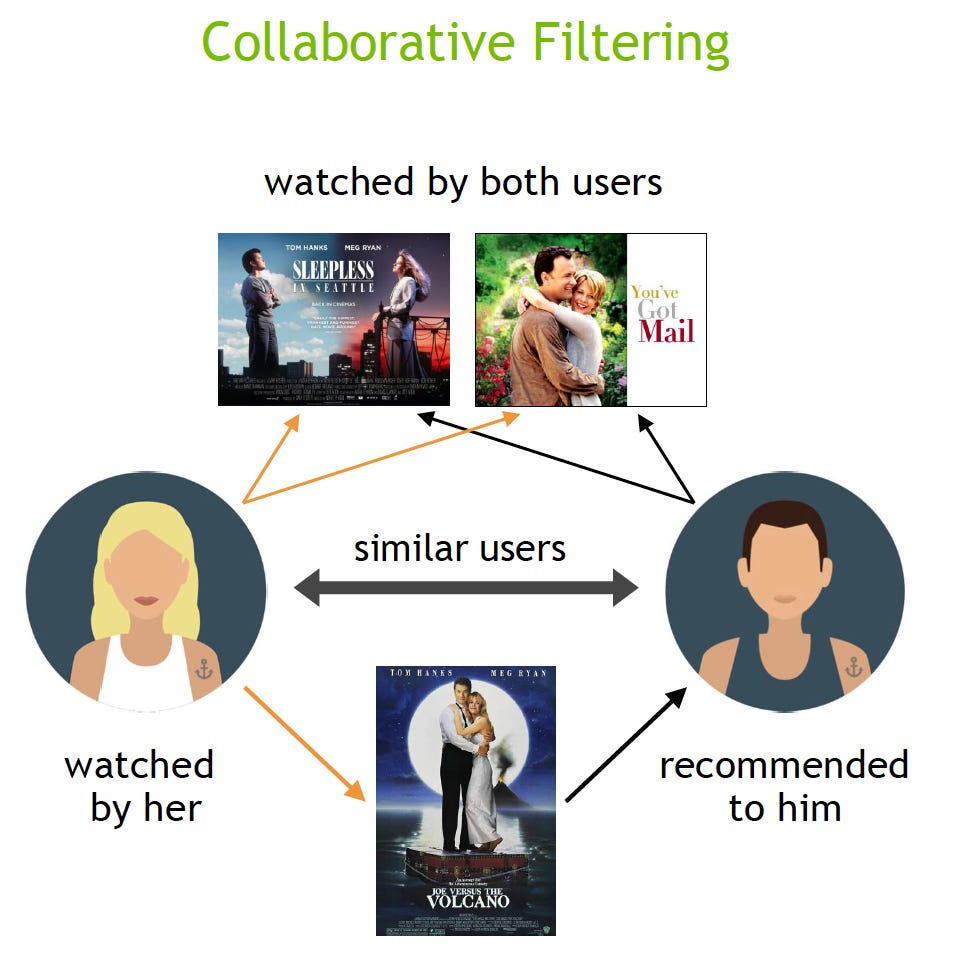

Collaborative filtering: "People who bought X also bought Y." If you liked a certain movie, it would suggest other movies liked by people with similar viewing histories.

Content-based filtering: If you liked action movies, it would suggest more action movies based on genre tags.

While useful, these systems often struggled with the "cold start" problem (what do you recommend to a new user with no history?) and could be overly simplistic.
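Both classic approaches fit in a few lines. The sketch below uses invented purchase histories and genre tags: `also_bought` counts item co-occurrence (a simple form of item-based collaborative filtering), and `similar_by_tags` ranks items by Jaccard overlap of their tags (a simple content-based filter). All names and data are hypothetical.

```python
# Hypothetical purchase histories and genre tags, invented for illustration.
PURCHASES = {
    "alice": {"Heat", "Ronin", "Up"},
    "bob": {"Heat", "Ronin"},
    "carol": {"Heat", "Up", "Coco"},
}
TAGS = {
    "Heat": {"action", "crime"},
    "Ronin": {"action", "thriller"},
    "Up": {"animation", "family"},
    "Coco": {"animation", "family", "music"},
}

def also_bought(item: str) -> list[tuple[str, int]]:
    """Collaborative filtering via item co-occurrence: count how often each
    other item shows up in the histories of users who have `item`."""
    counts: dict[str, int] = {}
    for items in PURCHASES.values():
        if item in items:
            for other in items - {item}:
                counts[other] = counts.get(other, 0) + 1
    # Most frequent first; ties broken alphabetically for a stable order.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))

def jaccard(a: set[str], b: set[str]) -> float:
    """Tag overlap: size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def similar_by_tags(item: str) -> list[tuple[str, float]]:
    """Content-based filtering: rank other items by genre-tag overlap."""
    scores = [(other, jaccard(TAGS[item], TAGS[other])) for other in TAGS if other != item]
    return sorted(scores, key=lambda kv: (-kv[1], kv[0]))

print(also_bought("Heat"))      # [('Ronin', 2), ('Up', 2), ('Coco', 1)]
print(similar_by_tags("Heat"))  # Ronin first (shares "action"); Coco and Up score 0.0
print(also_bought("NewFilm"))   # [] (no purchase history, no signal)
```

Note how `also_bought("NewFilm")` comes back empty: with no purchase history, collaborative filtering has nothing to go on, which is the cold-start problem in miniature.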

What LLMs Bring to Search and Recommendations

LLMs are trained on vast amounts of text, which lets them interpret and generate human language far more flexibly than keyword matching ever could. This means search isn't just about matching words anymore; it's about understanding your intent.

For instance, if you type, "dog-friendly hiking trails in the Colorado Rockies with moderate difficulty and scenic views in early October," an LLM doesn't just look for those exact words. It understands you want:

A trail suitable for your dog.

A moderate physical challenge.

Beautiful scenery.

Specific seasonal conditions in early October.
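One way a system can act on that understanding is to turn the sentence into structured constraints and filter against them. In this sketch the "parsed" intent is written by hand to stand in for what an LLM might extract, the trail catalog is invented, and "early October" is simplified to the month.

```python
from dataclasses import dataclass

@dataclass
class TrailIntent:
    """Structured constraints pulled out of a free-text query.
    Hypothetical: a real system might have an LLM fill this in."""
    region: str
    dogs_allowed: bool
    difficulty: str
    scenic: bool
    month: str

@dataclass
class Trail:
    name: str
    region: str
    dogs_allowed: bool
    difficulty: str
    scenic: bool
    open_months: set[str]

# Hypothetical catalog; names and attributes are invented.
TRAILS = [
    Trail("Aspen Loop", "Colorado Rockies", True, "moderate", True, {"Sep", "Oct"}),
    Trail("Summit Scramble", "Colorado Rockies", False, "hard", True, {"Jul", "Aug"}),
    Trail("Creek Walk", "Colorado Rockies", True, "easy", False, {"May", "Oct"}),
]

def matches(trail: Trail, intent: TrailIntent) -> bool:
    """A trail qualifies only if it satisfies every extracted constraint."""
    return (
        trail.region == intent.region
        and (trail.dogs_allowed or not intent.dogs_allowed)
        and trail.difficulty == intent.difficulty
        and (trail.scenic or not intent.scenic)
        and intent.month in trail.open_months
    )

# What we'd hope an LLM extracts from the example query above.
intent = TrailIntent("Colorado Rockies", dogs_allowed=True, difficulty="moderate", scenic=True, month="Oct")
print([t.name for t in TRAILS if matches(t, intent)])  # ['Aspen Loop']
```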

This allows search to synthesize information and give you direct, nuanced answers, not just a list of links. We're already seeing this with "AI Overviews" in Google Search; it's like having a super-smart research assistant at your fingertips.

Introduction to MultiModal AI

But what if your query isn't just text? What if you want to show the system a photo, or speak your question aloud? That's where MultiModal AI comes in.

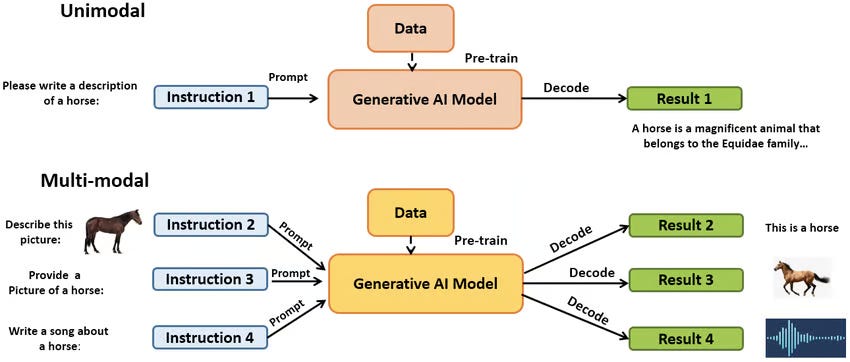

"MultiModal" simply means processing and understanding information from multiple modes, or types of data, such as text, images, audio, and even video, together. Imagine an AI that not only reads about something but also sees it and hears it, then connects those signals into a single picture. This allows for a much richer understanding of the world and our interactions with it.
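Multimodal models such as OpenAI's CLIP learn to map images and text into a shared vector space, so a text query can be compared directly against images. The sketch below fakes that with hand-written 3-dimensional vectors (the file names and values are invented; real encoders produce hundreds of dimensions) and ranks images by cosine similarity.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: how closely two vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical image embeddings; dimensions loosely mean [shoe-ness, redness, outdoors].
IMAGE_EMBEDDINGS = {
    "photo_red_sneaker.jpg": [0.9, 0.8, 0.1],
    "photo_blue_boot.jpg": [0.8, 0.1, 0.6],
    "photo_red_tent.jpg": [0.0, 0.8, 0.9],
}

def search_images(query_embedding: list[float], k: int = 2) -> list[str]:
    """Return the k images whose embeddings are closest to the query embedding."""
    ranked = sorted(
        IMAGE_EMBEDDINGS,
        key=lambda name: cosine(query_embedding, IMAGE_EMBEDDINGS[name]),
        reverse=True,
    )
    return ranked[:k]

# Pretend a text encoder mapped the words "red shoes" into the same space.
text_query = [0.9, 0.9, 0.0]
print(search_images(text_query))  # ['photo_red_sneaker.jpg', 'photo_blue_boot.jpg']
```

Because text and images live in one space, the same `search_images` call would work unchanged if the query vector came from a photo instead of words.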

Generative AI in Search - From Query to Dialogue

The real power of LLMs in search emerges when they move beyond just answering single queries. This is where Generative AI steps in, turning search into a more conversational experience. Instead of just a search bar, you're interacting with a smart assistant.

Think of it this way:

Multi-step questions: You can ask follow-up questions, clarify your needs, and refine your search as you go, just like talking to a human expert.

Summarized answers: The AI can combine information from various sources to give you a cohesive summary, rather than making you piece together information from multiple links.

Contextual understanding: It remembers previous turns in the conversation, building a better picture of what you're truly looking for. This moves search from a one-off query to an ongoing dialogue.
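The "remembers previous turns" part can be pictured as a running set of constraints that each new message refines. This is a hypothetical sketch: the slot names are invented, and the per-turn constraints are hand-written where a real system would likely ask an LLM to extract them from the message and the conversation so far.

```python
from dataclasses import dataclass, field

@dataclass
class Conversation:
    """Carries search constraints across turns so follow-ups refine the search
    instead of restarting it."""
    constraints: dict[str, str] = field(default_factory=dict)
    history: list[str] = field(default_factory=list)

    def add_turn(self, utterance: str, extracted: dict[str, str]) -> dict[str, str]:
        # `extracted` is hand-written here; a real system might ask an LLM
        # to pull these slots out of `utterance` given `history`.
        self.history.append(utterance)
        # New values overwrite the same slot; untouched slots persist.
        self.constraints.update(extracted)
        return dict(self.constraints)

chat = Conversation()
chat.add_turn("I need running shoes", {"category": "running shoes"})
chat.add_turn("Something for trails", {"terrain": "trail"})
state = chat.add_turn("Actually, under $100", {"max_price": "100"})
print(state)  # {'category': 'running shoes', 'terrain': 'trail', 'max_price': '100'}
```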

Next-Gen Recommendation Systems Powered by LLMs

Recommendations used to be pretty basic: "People who bought X also bought Y." Helpful, but limited. Now, with LLMs and MultiModal AI, recommendations can draw on far richer signals and become genuinely personal.

LLMs can pick up on subtle nuances in product descriptions, user reviews, and even your past browsing patterns to make more relevant suggestions. They can also generate explanations of why a recommendation was made, building trust and helping you discover things that truly resonate with your tastes.
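One way to keep such explanations honest is to ground them in concrete attributes the two items actually share, and ask the LLM to explain using only those. A sketch, assuming hypothetical product names and attributes; the LLM call itself is omitted, so the function just builds the prompt.

```python
# Hypothetical attributes, e.g. what an LLM might extract from descriptions and reviews.
ATTRIBUTES = {
    "Trail Runner X": {"lightweight", "waterproof", "wide toe box"},
    "Ridge Pro": {"waterproof", "wide toe box", "grippy outsole"},
    "City Glide": {"lightweight", "cushioned"},
}

def shared_reasons(liked: str, recommended: str) -> list[str]:
    """Concrete attributes the recommended item has in common with one the user liked."""
    return sorted(ATTRIBUTES[liked] & ATTRIBUTES[recommended])

def explanation_prompt(liked: str, recommended: str) -> str:
    """Build a prompt asking an LLM to explain the pick using only these facts,
    which keeps the explanation tied to real item attributes."""
    reasons = ", ".join(shared_reasons(liked, recommended)) or "none"
    return (
        f"The user liked {liked}. We are recommending {recommended}.\n"
        f"Shared attributes: {reasons}.\n"
        "In one friendly sentence, explain the recommendation using only these attributes."
    )

print(explanation_prompt("Trail Runner X", "Ridge Pro"))
```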

Core Architectures: RAG, ReAct, Agents & Diffusion in Retrieval

How do these smart systems actually work under the hood? Here are a few key concepts:

RAG (Retrieval-Augmented Generation): This is a popular technique where an LLM doesn't rely only on what it memorized during training. Instead, it first retrieves relevant information from an external source (like a web search index or a company's internal documents) and then generates an answer based on that retrieved information. This helps keep the LLM's responses accurate and grounded in facts, reducing "hallucinations."
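The retrieve-then-generate flow can be sketched in a few lines. This is a toy version under stated assumptions: the document store is invented, retrieval uses plain word overlap instead of embeddings or BM25, and the final step of sending the prompt to an LLM is left out.

```python
import re

# Hypothetical document store; in practice this might be a vector database or search index.
DOCUMENTS = [
    "Our return window is 30 days from delivery for unworn shoes.",
    "Trail running shoes have deeper lugs for grip on loose terrain.",
    "Gift cards cannot be exchanged for cash.",
]

def tokens(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, k: int = 1) -> list[str]:
    """Retrieval step: rank documents by word overlap with the query
    (a stand-in for embedding similarity or BM25)."""
    q = tokens(query)
    ranked = sorted(DOCUMENTS, key=lambda doc: len(q & tokens(doc)), reverse=True)
    return ranked[:k]

def build_rag_prompt(query: str, k: int = 1) -> str:
    """Augmentation step: put the retrieved passages in the prompt and tell the
    model to answer only from them. Generation (calling an LLM) is not shown."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, k))
    return (
        "Answer using only the context below. If the answer is not there, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

print(build_rag_prompt("How many days do I have to return shoes?"))
```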

ReAct (Reasoning and Acting): This framework lets an LLM interleave reasoning about a problem with actions such as calling external tools, feeding each tool's result back into its next reasoning step. For example, an LLM could reason that it needs to find a flight, call a flight search API to get real-time information, and then reason about the results.
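ReAct's loop is easiest to see as a transcript of Thought, Action, and Observation steps. In this sketch the "LLM" is a scripted function and the flight tool is a stub returning made-up data; both are hypothetical stand-ins, since a real agent would prompt a model with the transcript so far and call a real API.

```python
from typing import Callable

# Hypothetical tool: a stub standing in for a real flight-search API.
def search_flights(route: str) -> str:
    fake_results = {"SFO-DEN": "UA 123 departs 09:15, $189"}
    return fake_results.get(route, "no flights found")

TOOLS: dict[str, Callable[[str], str]] = {"search_flights": search_flights}

def scripted_model(transcript: list[str]) -> tuple[str, str, str]:
    """Stand-in for an LLM: returns (thought, action, action_input).
    A real ReAct agent would prompt an LLM with the transcript instead."""
    if not any(line.startswith("Observation:") for line in transcript):
        return ("I need live flight data for this route.", "search_flights", "SFO-DEN")
    last_obs = transcript[-1].removeprefix("Observation: ")
    return ("I have what I need.", "finish", f"Found: {last_obs}")

def react(question: str, max_steps: int = 5) -> tuple[str, list[str]]:
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        # Reason: decide what to do next given everything seen so far.
        thought, action, arg = scripted_model(transcript)
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            transcript.append(f"Answer: {arg}")
            return arg, transcript
        transcript.append(f"Action: {action}[{arg}]")
        # Act: run the chosen tool, then feed its result back as an observation.
        transcript.append(f"Observation: {TOOLS[action](arg)}")
    return "gave up", transcript

answer, log = react("Find me a flight from SFO to Denver tomorrow")
print("\n".join(log))
```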

Agents: Building on ReAct, AI agents are systems that can pursue goals, plan multi-step actions, and use various tools (like search engines, calculators, and databases) to accomplish complex tasks. They can decompose a big problem into smaller steps and execute them.
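Agents often separate planning from execution: a planner breaks the goal into steps, and an executor runs each step with the right tool, passing results forward. Here the plan is hand-written to show what an LLM planner might produce, and the prices, tool names, and budget are invented. This is a plan-and-execute sketch, one of several agent designs.

```python
import operator
from typing import Any, Callable

# Hypothetical price data and tools; a real agent might call search APIs or databases.
PRICES = {"hiking boots": 120.0, "trail socks": 15.0}

TOOLS: dict[str, Callable[..., Any]] = {
    "lookup_price": lambda item: PRICES[item],
    "add": operator.add,
    "at_most": operator.le,
}

# A plan an LLM planner *might* produce for the goal
# "Can I get hiking boots and trail socks for $150 or less?"
# Each step: (result name, tool name, arguments); "$name" refers to an earlier result.
PLAN = [
    ("boots", "lookup_price", ["hiking boots"]),
    ("socks", "lookup_price", ["trail socks"]),
    ("total", "add", ["$boots", "$socks"]),
    ("within_budget", "at_most", ["$total", 150.0]),
]

def run_plan(plan: list[tuple[str, str, list[Any]]]) -> dict[str, Any]:
    """Execute steps in order, substituting earlier results into later steps."""
    results: dict[str, Any] = {}
    for name, tool, args in plan:
        resolved = [
            results[arg[1:]] if isinstance(arg, str) and arg.startswith("$") else arg
            for arg in args
        ]
        results[name] = TOOLS[tool](*resolved)
    return results

results = run_plan(PLAN)
print(results["total"], results["within_budget"])  # 135.0 True
```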

Diffusion in Retrieval: While diffusion models are best known for image generation (as in DALL-E 2 and Stable Diffusion), they are also being explored for information retrieval, particularly for generating better representations (embeddings) of queries and documents, which could make semantic search even more precise.

MultiModal Fusion in Understanding User Behavior

This is where MultiModal AI truly shines for recommendations. Instead of just suggesting another blue t-shirt because you bought one, MultiModal AI can:

Analyze the product images: Did your blue t-shirt have a specific abstract art print?

Understand your visual browsing habits: Do you consistently look at vibrant colors or minimalist designs, even across different product categories?

Read reviews: What specific features are people praising or complaining about, beyond just a star rating?

Process audio/video: If you're looking for music, it can analyze not just genre tags but the actual sound of the instruments, the mood of the lyrics, or even visual cues in music videos.

By combining all these data points, the system builds a much richer profile of your preferences, leading to recommendations that feel almost clairvoyant.
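One common way to combine these signals is late fusion: score each modality separately, then take a weighted sum. Below is a sketch with invented products, scores, and hand-picked weights; real systems usually learn the weights, or fuse earlier by combining embeddings before scoring.

```python
from dataclasses import dataclass

@dataclass
class ModalityScores:
    """Per-modality match scores in [0, 1] between one user and one product.
    In practice each would come from a separate model; these are invented."""
    image: float     # e.g. visual similarity to items the user lingered on
    text: float      # e.g. how well reviews praise what the user cares about
    behavior: float  # e.g. a collaborative-filtering signal from purchases

# Hypothetical fusion weights (they sum to 1).
WEIGHTS = {"image": 0.4, "text": 0.3, "behavior": 0.3}

def fused_score(s: ModalityScores) -> float:
    """Late fusion: a weighted sum of independent per-modality scores."""
    return WEIGHTS["image"] * s.image + WEIGHTS["text"] * s.text + WEIGHTS["behavior"] * s.behavior

candidates = {
    "abstract-print tee": ModalityScores(image=0.9, text=0.7, behavior=0.4),
    "plain blue tee": ModalityScores(image=0.3, text=0.5, behavior=0.9),
    "minimalist hoodie": ModalityScores(image=0.8, text=0.6, behavior=0.2),
}
ranking = sorted(candidates, key=lambda name: fused_score(candidates[name]), reverse=True)
print(ranking)  # ['abstract-print tee', 'minimalist hoodie', 'plain blue tee']
```

The "another blue t-shirt" candidate wins on purchase behavior alone but drops to last once visual and review signals are weighed in.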

Evolution of User Interfaces

The way we interact with technology is evolving:

Beyond the search bar: While the classic search bar won't disappear, expect to see more interfaces where you can speak your query, upload an image, or even interact via AR/VR.

Conversational AI: Smart assistants (think Siri, Alexa, Google Assistant) are becoming much more capable, seamlessly integrating search and recommendations into natural conversations. You'll be able to ask complex questions and get tailored advice across various tasks.

Context-aware interfaces: These systems will anticipate your needs based on your location, time of day, calendar, and past interactions, offering proactive help.
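A simple way to picture context-aware ranking: turn signals like time of day and the next calendar event into tags, then boost suggestions that match. Everything here (the suggestions, tags, and hour cut-offs) is a hypothetical rule-based sketch; location is left out for brevity, and production systems would more likely learn these relationships from past interactions than hard-code them.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Context:
    hour: int                  # local hour of day, 0-23
    next_event: Optional[str]  # title of the next calendar event, if any

# Hypothetical suggestions, each tagged with the contexts where it is useful.
SUGGESTIONS = {
    "coffee shops nearby": {"morning"},
    "dinner reservations": {"evening"},
    "directions to the gym": {"calendar:gym"},
    "movie trailers": set(),
}

def active_tags(ctx: Context) -> set[str]:
    """Turn raw context signals into tags (hand-written rules for illustration)."""
    tags: set[str] = set()
    if 5 <= ctx.hour < 12:
        tags.add("morning")
    elif 17 <= ctx.hour < 22:
        tags.add("evening")
    if ctx.next_event:
        tags.add(f"calendar:{ctx.next_event.lower()}")
    return tags

def rerank(ctx: Context) -> list[str]:
    """Put suggestions matching more of the current context first; ties alphabetical."""
    tags = active_tags(ctx)
    return sorted(SUGGESTIONS, key=lambda s: (-len(SUGGESTIONS[s] & tags), s))

print(rerank(Context(hour=8, next_event="Gym")))
print(rerank(Context(hour=19, next_event=None)))
```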

What Big Tech is Doing

You're already seeing this in action from major players:

Google: Their "AI Overviews" and advancements in conversational search (like with Gemini) are direct applications of LLMs. They also use MultiModal AI for image search and product discovery.

Microsoft (Copilot, formerly Bing Chat): Integrating LLMs directly into their search engine and operating system, allowing for conversational search, content creation, and real-time information synthesis.

Amazon: Their product recommendation engines are constantly evolving, now leveraging MultiModal signals to suggest products based on visual similarities, sentiment from reviews, and deeper understanding of user intent.

Netflix/Spotify: Moving beyond simple genre matching to using AI to understand the emotional resonance of content (audio, visual, textual) to suggest shows and music you'll truly connect with.

Challenges & Trade-offs

While the future looks incredibly promising, there are important considerations:

Bias: LLMs and MultiModal AI learn from data. If that data contains biases, the AI can perpetuate or even amplify them in its search results and recommendations. Ensuring fairness and diversity in training data is crucial.

Explainability: Sometimes, these advanced models can feel like "black boxes." Understanding why a certain recommendation was made or how a search result was generated can be complex. Progress in "explainable AI" is essential for trust.

Privacy: As these systems get better at understanding our behavior, the amount of data they collect grows. Safeguarding user privacy and ensuring transparent data practices are paramount.

Computational cost: Running these large, complex models requires significant computing power, which has environmental and economic implications.

Hallucinations: While RAG helps, LLMs can still sometimes generate incorrect or nonsensical information. Continuous refinement is needed.
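One basic guardrail against hallucinations is to check whether each sentence of a generated answer is actually supported by the retrieved sources. The sketch below uses a crude word-overlap heuristic with an arbitrary threshold and an invented example, purely for illustration; it misses paraphrases, and more robust approaches use entailment models or require the model to cite its sources.

```python
import re

STOPWORDS = {"the", "a", "an", "is", "are", "of", "for", "to", "in", "and", "it", "on"}

def content_words(text: str) -> set[str]:
    """Lowercase words minus a few very common function words."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def unsupported_sentences(answer: str, sources: list[str], threshold: float = 0.6) -> list[str]:
    """Flag answer sentences whose content words mostly do not appear in any source.
    A crude lexical heuristic; the 0.6 threshold is arbitrary."""
    source_words = set().union(*(content_words(s) for s in sources))
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if words and len(words & source_words) / len(words) < threshold:
            flagged.append(sentence)
    return flagged

sources = ["The return window is 30 days from delivery for unworn shoes."]
answer = "You can return unworn shoes within 30 days of delivery. Refunds include free gift wrapping."
print(unsupported_sentences(answer, sources))  # ['Refunds include free gift wrapping.']
```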

Conclusion

The integration of LLMs and MultiModal AI marks a significant shift in how we interact with information and discover new things online. We're moving from a rigid, keyword-driven experience to one that is more intuitive, conversational, and deeply personalized. This evolution promises a digital world that understands our needs with greater accuracy, anticipating our desires and providing assistance in ways that feel increasingly natural. While the journey involves navigating challenges around ethics, bias, and privacy, the path ahead points to a future where AI acts as a sophisticated, helpful partner, making our online lives smoother, richer, and more engaging.

Until next time,

Stay curious, stay innovative, and subscribe for more newsletters like this one.

Read more from the WTF in Tech newsletter: