If you’ve been following AI trends lately, you’ve probably heard the term “agentic systems” thrown around a lot. From OpenAI’s demo of agents doing your entire shopping process to research labs proposing architectures with memory, planning, and tool use, it’s clear we’re moving past the age of passive LLMs.

But let’s be honest: most diagrams of agentic systems either look like they were designed by abstract artists or are just a mess of arrows and jargon. I’ve been there, trying to decode a workflow chart with “controller,” “planner,” “executor,” and “retriever,” and wondering: who’s actually in charge here?

So in today’s edition of Where’s The Future in Tech, we’re unpacking this. No buzzwords, no fluff, just a practical breakdown of how different agentic workflows are structured, when to use which, and what it means for the future of AI.

What Even Is an Agentic Workflow?

Let’s start with the basics. A workflow in this context is the step-by-step blueprint that determines how an AI agent thinks, plans, acts, and learns. It’s what transforms a static chat model, like the ones behind ChatGPT, into a system that can plan a trip, write code, search the web, or even debug its own output.

Think of it as choreography: the model is the dancer, but the workflow is the routine. Without it, even the most capable model doesn’t know what to do next.

Agentic workflows typically involve:

Memory (so the agent remembers past steps): This is the agent’s long-term brain. It keeps track of what it has done so far (previous steps, decisions, and user inputs) so it doesn’t lose context mid-task. Without memory, agents would be like goldfish with amnesia.

Planning (breaking big tasks into smaller ones): Planning is the agent’s ability to look at a big, messy task and break it down into manageable steps. Like a chef preparing ingredients before cooking, the agent maps out what needs to be done before jumping in.

Tool use (accessing APIs, search engines, databases): Agents aren’t all-knowing; they rely on tools. Whether it’s calling APIs, querying a database, or searching the web, tool use lets them extend their capabilities beyond what’s inside the model.

Reflection (checking its own output and revising): Reflection is the agent's self-review process. After completing a step, it pauses and asks: “Did I do this right?” If not, it revises and improves. Think of it as the built-in critic that keeps the agent honest.
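To make the tool-use idea a bit more concrete, here is a minimal Python sketch of a tool registry. Everything in it is an assumption made for illustration: the `TOOLS` table, the `use_tool` helper, and the two toy tools are hypothetical names, and a real agent would wire in actual APIs, databases, or search calls instead.

```python
from typing import Any, Callable


# Hypothetical toy tools; real ones would call an API, a database, or a search engine.
def add(a: float, b: float) -> float:
    return a + b


def word_count(text: str) -> int:
    return len(text.split())


# The registry maps a tool name (what the model asks for) to the function that runs it.
TOOLS: dict[str, Callable[..., Any]] = {
    "add": add,
    "word_count": word_count,
}


def use_tool(name: str, **arguments: Any) -> Any:
    """Look up a tool by name and call it with the given arguments."""
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**arguments)


total = use_tool("add", a=2, b=3)
words = use_tool("word_count", text="agents rely on tools")
```

The point of the registry is that the model only has to name a tool and supply arguments; the surrounding code decides what actually runs.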

Let's understand the architecture and working of individual agent types.

1. Simple Agent: One-Shot, No Memory

This is the most basic kind of agent you’ll come across. Think of it like a vending machine: you give it input, it gives you output. That’s it. No memory, no learning, no thinking about what happened last time.

How it works:

The agent receives an input (like a text prompt, a sensor reading, or a button press).

It runs this input through a fixed set of rules or a direct model call.

Based on which rule matches (or on what the model returns), it chooses and executes an action.

The process then resets for the next input. There's no memory of what just happened.
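The steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `RULES` table and the `simple_agent` function are hypothetical, and a direct model call could stand in for the rule lookup.

```python
from typing import Callable

# Hypothetical rule table: each keyword maps to the action to run when it matches.
RULES: dict[str, Callable[[str], str]] = {
    "hello": lambda text: "Hi there!",
    "help": lambda text: "Try saying 'hello'.",
}


def simple_agent(user_input: str) -> str:
    """Map one input to one action. Nothing is stored between calls."""
    lowered = user_input.lower()
    for keyword, action in RULES.items():
        if keyword in lowered:
            return action(user_input)
    return "Sorry, I don't have a rule for that."


first = simple_agent("Hello!")
second = simple_agent("Hello!")  # same input, same output: the agent has no state
```

Because the function keeps no state, calling it twice with the same input always gives the same answer, which is exactly the vending-machine behavior described here.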

Where it works:

This is useful when you just want a quick, reliable response and don’t care about context, as with a calculator, a command bot, or any prompt-in → response-out system.

Key characteristic: Lack of internal state or memory. Its decision-making is entirely based on the current, instantaneous input. This makes it efficient for tasks where past history or future predictions are irrelevant, and immediate, consistent responses are required.

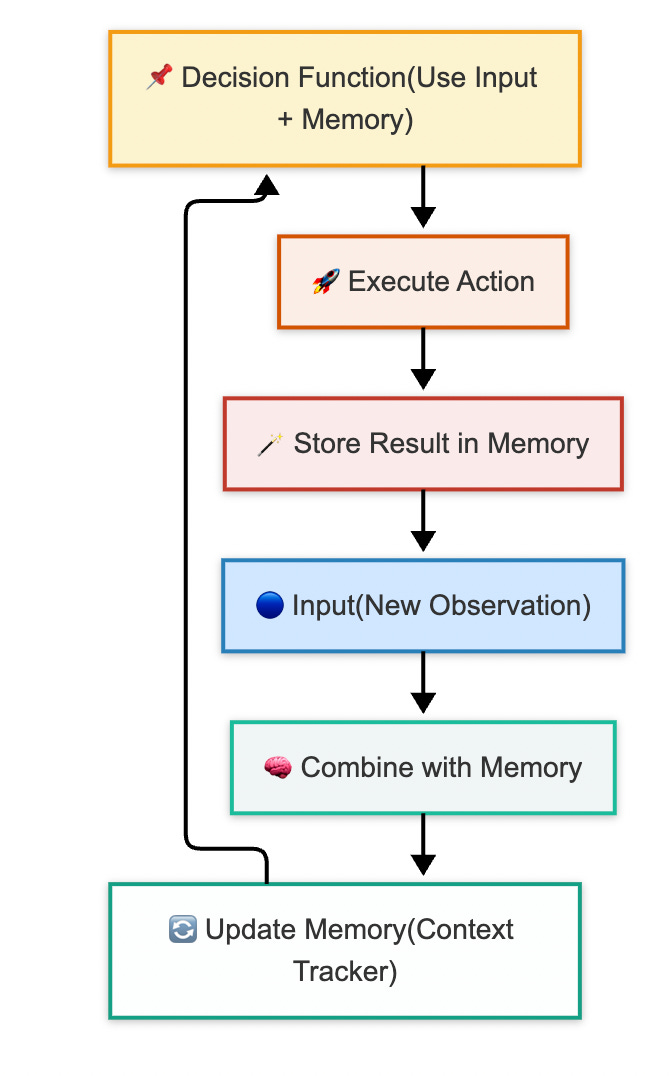

2. Agent with Memory: Context-Aware Decision Maker

Think of it as a vending machine that suddenly remembers your last choice and uses it to make better suggestions. This next-level agent stores and updates a memory of what’s happened so far. That memory shapes its future actions.

How it works:

The agent gets a new input.

It combines this input with its internal memory (which stores past actions, states, or context).

This memory is updated continuously, so the agent can “understand” how the environment is evolving over time.

It then makes a decision using both the current input and what it remembers from before.
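Here is a minimal Python sketch of those steps, sticking with the vending-machine picture. The `MemoryAgent` class and its response strings are hypothetical, and the "memory" is just a list; a real system might use a database or a summarized conversation history instead.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryAgent:
    """Keeps a running history and uses it when deciding how to respond."""

    history: list[str] = field(default_factory=list)

    def act(self, user_input: str) -> str:
        # Decide using both the current input and what was seen before.
        if user_input in self.history:
            response = f"You picked '{user_input}' before. Same again?"
        elif self.history:
            response = f"Got '{user_input}'. Last time you chose '{self.history[-1]}'."
        else:
            response = f"Got '{user_input}'. This is our first interaction."
        # Update memory after responding so the next call can see this input.
        self.history.append(user_input)
        return response


agent = MemoryAgent()
replies = [agent.act(choice) for choice in ["cola", "chips", "cola"]]
```

The same input ("cola") produces different responses on the first and third calls, because the decision now depends on the stored history as well as the input.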

What's different?

Unlike the simple agent, it’s no longer responding blindly. It’s using history to add nuance, just as you respond differently in a conversation depending on what was said earlier.

Where it works:

This kind of agent is great for applications like chatbots that need to track ongoing conversations, or navigation systems that need to remember previous locations.

Key characteristic: Ability to maintain and leverage an internal representation of the environment, enabling context-aware and more intelligent decision-making over time. This allows for navigation, tracking, and understanding of dynamic environments.

3. Iterative Agent: Reflect, Retry, Refine

This one steps it up by adding self-evaluation and improvement. Think of it like a writer revising drafts or an artist refining a sketch. The Iterative Agent does something, looks at the result, and improves it, over and over.

How it works:

It starts with a clearly defined goal that it’s trying to achieve.

It creates an initial plan or output.

It runs that plan, then evaluates how close the result came to the goal.

Based on the evaluation, it updates its approach, maybe fixing an error or optimizing a strategy.

It repeats this cycle until it’s satisfied or reaches a stopping condition.
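The cycle above can be sketched as a short Python loop. Treat it as a sketch under assumptions: `iterative_agent`, `evaluate`, `revise`, and the `REQUIRED` checklist are hypothetical names, and the toy "draft" is just a list of sections. In a real agent, the evaluation and revision steps would typically be model calls or test runs.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def iterative_agent(
    initial: T,
    evaluate: Callable[[T], float],
    revise: Callable[[T, float], T],
    good_enough: float,
    max_rounds: int = 10,
) -> tuple[T, int]:
    """Refine a candidate until its score is good enough or rounds run out.

    Returns the final candidate and the number of revisions applied.
    """
    candidate = initial
    for revisions in range(max_rounds):
        score = evaluate(candidate)
        if score >= good_enough:
            return candidate, revisions  # goal reached: stop early
        candidate = revise(candidate, score)
    return candidate, max_rounds  # stopping condition: out of rounds


# Toy goal: a draft that contains every required section.
REQUIRED = ["greeting", "agenda", "sign-off"]


def evaluate(draft: list[str]) -> float:
    """Score is the fraction of required sections present in the draft."""
    return sum(part in draft for part in REQUIRED) / len(REQUIRED)


def revise(draft: list[str], score: float) -> list[str]:
    """Fix one problem per round: add the first missing section."""
    missing = [part for part in REQUIRED if part not in draft]
    return draft + missing[:1]


final_draft, revisions_used = iterative_agent(["greeting"], evaluate, revise, good_enough=1.0)
```

The `max_rounds` cap matters in practice: without a stopping condition, an agent that never reaches its target score would loop forever.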

The feedback loop:

At the core of this agent is the refinement loop: doing, checking, learning, and doing again. That’s what lets its output get better with each pass.

Where it works:

This is ideal for content generation (e.g. writing code or emails), game playing, or optimization tasks like scheduling or layout design: anywhere repeated refinement leads to better results.

Key characteristic: Employs a continuous feedback loop that enables self-correction and progressive improvement over multiple attempts, making it suitable for tasks requiring optimization and high-quality output.

4. Hierarchical Agent: Structured Problem Solver

This is the project manager of agents. The Hierarchical Agent breaks a big problem into smaller chunks and delegates those chunks to specialized sub-agents, each responsible for its own part of the job.

How it works:

A top-level agent receives a large goal.

It splits the goal into sub-goals and assigns them to different specialist agents (each expert in a specific domain).

These low-level agents work independently on their parts. Some may even be memory-based or iterative themselves.

The top-level agent monitors progress, resolves conflicts, and stitches all the results back together into a complete solution.
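A stripped-down Python sketch of this delegate-and-combine pattern might look like the following. The `SPECIALISTS` table, the fixed `plan`, and the `hierarchical_agent` function are all hypothetical; here the plan is hard-coded and the specialists are one-line functions, whereas a real top-level agent would generate the plan itself and would also monitor progress and resolve conflicts, which this sketch leaves out.

```python
from typing import Callable

# Hypothetical specialists: each one handles a single kind of sub-goal.
SPECIALISTS: dict[str, Callable[[str], str]] = {
    "research": lambda topic: f"notes on {topic}",
    "write": lambda topic: f"draft about {topic}",
    "review": lambda topic: f"review of the {topic} draft",
}


def plan(goal: str) -> list[tuple[str, str]]:
    """Split a goal into (specialist, sub-goal) pairs. Fixed here for illustration."""
    return [("research", goal), ("write", goal), ("review", goal)]


def hierarchical_agent(goal: str) -> str:
    """Top-level agent: plan, delegate each sub-goal, then stitch results together."""
    results = []
    for specialist_name, sub_goal in plan(goal):
        worker = SPECIALISTS[specialist_name]
        results.append(worker(sub_goal))
    return "; ".join(results)


report = hierarchical_agent("solar panels")
```

Each specialist could itself be a memory-based or iterative agent; the top-level agent only needs to know what to hand over and how to combine what comes back.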

Where it works:

This structure is ideal for solving complex, multi-step tasks like end-to-end product building, enterprise workflows, or multi-modal content creation (e.g. generate text, then images, then videos).

Key characteristic: Facilitates the tackling of highly complex problems by decomposing them into manageable parts and leveraging specialized expertise, offering a structured and scalable approach to agentic autonomy.

5. Collaborative Agent: Distributed Peer Teamwork

Now imagine a team of agents working together, not in a top-down structure, but as equals. Each brings something unique to the table, and they collaborate, negotiate, and share knowledge to solve a problem.

How it works:

A shared problem is known across multiple agents.

Each agent independently identifies what it can contribute.

They communicate with one another, sharing data, requesting help, making suggestions.

A coordination layer helps them stay aligned. This could include negotiation rules, bidding systems, or shared memory spaces.

They each take action based on what they know, what others tell them, and what the team needs.

As tasks progress, they continue refining the solution together.
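One simple way to picture these steps is a shared "blackboard" that every peer can read and write. The Python sketch below assumes that design: `PeerAgent`, `collaborate`, and the board keys are hypothetical, and the shared dictionary stands in for the coordination layer. Negotiation or bidding schemes would need more machinery than this.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PeerAgent:
    name: str
    needs: Optional[str]  # piece that must already be on the board (None = can start)
    provides: str         # piece this agent knows how to add

    def contribute(self, board: dict[str, str]) -> bool:
        """Add this agent's piece if it is missing and its input is available."""
        if self.provides in board:
            return False  # a peer already covered this
        if self.needs is not None and self.needs not in board:
            return False  # still waiting on a peer's work
        board[self.provides] = self.name
        return True


def collaborate(agents: list[PeerAgent], max_rounds: int = 5) -> dict[str, str]:
    """Let peers take turns on a shared board until nobody has anything to add."""
    board: dict[str, str] = {}  # shared memory space; no agent is in charge
    for _ in range(max_rounds):
        progressed = [agent.contribute(board) for agent in agents]
        if not any(progressed):
            break
    return board


team = [
    PeerAgent("writer", needs="outline", provides="draft"),
    PeerAgent("planner", needs=None, provides="outline"),
    PeerAgent("editor", needs="draft", provides="final"),
]
board = collaborate(team)
```

Note that no agent tells another what to do. The order of work falls out of what each peer sees on the board, even though the writer is listed before the planner.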

No central authority. Agents talk to each other and make decentralized decisions. It’s like a group of AI researchers working on different parts of a problem and brainstorming together.

Where it works:

Best for open-ended, collaborative workflows like creative brainstorming, decentralized control systems (robot swarms, IoT), or even multi-agent research simulations.

Key characteristic: Enables robust and flexible problem-solving for complex, distributed tasks by allowing multiple agents to leverage their individual capabilities and collectively achieve a goal, sometimes producing results that no single agent would reach alone. It thrives in environments where tasks can be parallelized or require diverse expertise without centralized control.

Conclusion

You might notice that these agent types aren't mutually exclusive. A sophisticated agentic workflow often combines several of these principles. For example, a hierarchical system might use iterative agents at lower levels, and those iterative agents might even have memory!

The beauty of agentic workflows lies in their modularity and the ability to combine these different agent types to build incredibly powerful and autonomous systems. We're truly moving into an era where AI isn't just a tool we use, but an intelligent entity that can proactively work for us, taking initiative and solving problems with increasing levels of sophistication.

That's all for this deep dive into the different kinds of AI agents! What types of agentic workflows do you find most intriguing? Share your thoughts!

Until next time,

Stay curious, stay innovative, and subscribe to get more newsletters like this one.
