If you’ve been neck-deep in building with large language models lately, you’ve likely heard two terms thrown around: AI Agents and MCP (Model Context Protocol).

They sound similar. They even overlap. But they’re solving very different problems.

In today’s edition of Where’s The Future in Tech, I’m unpacking both: what they are, where they shine, and why understanding the difference matters as we build more persistent AI systems.

AI Agents: Think, Act, Repeat

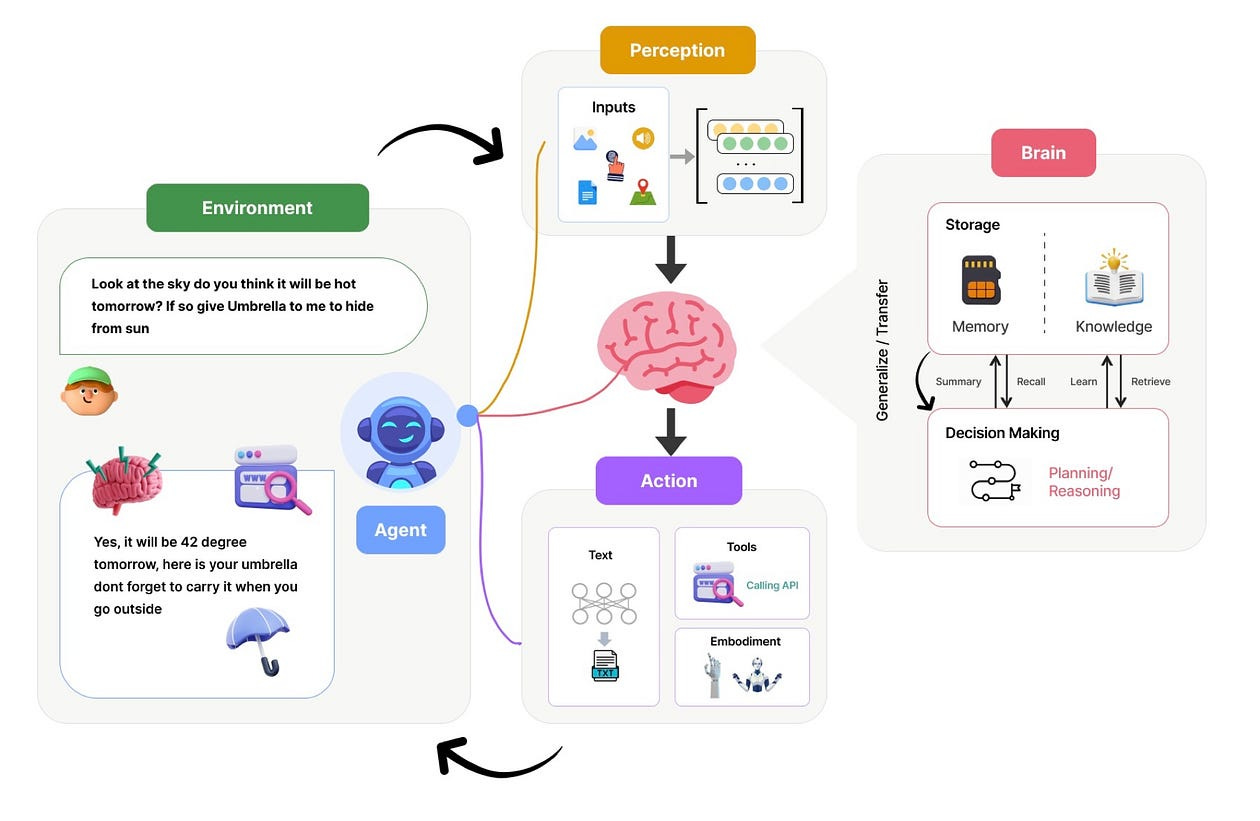

An AI Agent is a system that uses LLMs to think through goals and take actions, often in a loop. It's designed to:

Receive a task (“Book me a flight to Paris”),

Break it down (“Search flights → compare prices → book ticket”),

Execute each step (using tools, APIs, etc.),

And loop back as needed.

This loop is often built using the ReAct pattern (Reasoning + Acting). Think of it like:

Thought: I need to search for flights.

Action: call_search_API("flights to Paris")

Observation: Received flight options.

Thought: Let me choose the cheapest one.

AI Agents are exciting because they simulate cognition: multi-step thinking, reflection, retry logic, and planning.
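To make the Thought → Action → Observation loop concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `scripted_model` is a stand-in for a real LLM call, `search_flights` is a fake tool with hardcoded results, and the `Action: tool[input]` text format is just one simple convention, not a standard.

```python
from typing import Callable

# Hypothetical tool: a real agent would call a flight-search API here.
def search_flights(query: str) -> str:
    return "AirA $420; AirB $380; AirC $510"

TOOLS: dict[str, Callable[[str], str]] = {"search_flights": search_flights}

def scripted_model(transcript: list[str]) -> str:
    """Stand-in for an LLM call: picks the next step from the transcript so far."""
    observations = [line for line in transcript if line.startswith("Observation: ")]
    if not observations:
        return "Action: search_flights[flights to Paris]"
    # Parse "Name $price" pairs from the latest observation and pick the cheapest.
    latest = observations[-1][len("Observation: "):]
    options = [item.strip().split(" $") for item in latest.split(";")]
    name, price = min(options, key=lambda pair: int(pair[1]))
    return f"Final: book {name} at ${price}"

def run_agent(task: str, max_steps: int = 5) -> tuple[str, list[str]]:
    transcript = [f"Task: {task}"]
    for _ in range(max_steps):
        step = scripted_model(transcript)          # think
        transcript.append(step)
        if step.startswith("Final: "):
            return step[len("Final: "):], transcript
        # Parse "Action: tool[input]" and run the named tool (act).
        tool_name, _, rest = step[len("Action: "):].partition("[")
        observation = TOOLS[tool_name](rest.rstrip("]"))
        transcript.append(f"Observation: {observation}")  # observe, then loop
    return "gave up", transcript

answer, transcript = run_agent("Book me a flight to Paris")
print(answer)  # prints: book AirB at $380
```

The `max_steps` cap is the important design choice: without it, a model that never emits a final answer would loop forever.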

Here's what makes them special:

Autonomy: This is key. Unlike a regular program that just follows a script, an AI agent can make its own decisions about how to reach its goal. It doesn't need you to tell it every single step. It's proactive and goal-oriented.

Reasoning & planning: It can break down a complex goal into smaller tasks, figure out the best sequence of actions, and even adjust its plan if things change. Think of it planning a multi-stop journey for you.

Memory & learning: Good AI agents remember what they've done, what worked, and what didn't. They learn from their interactions and improve over time. Picture a smart assistant who gets better at helping you the more you work together.

Tool use: This is where the magic happens. AI agents can "use tools" which, in the digital world, means integrating with other software, APIs, or databases to gather information or perform actions. For example, to plan that trip, an agent might use a flight booking tool, a hotel search engine, and a weather app, just like you would open different browser tabs.

But there’s a catch:

They forget easily.

Their “memory” is usually in the prompt, and context runs out fast.

Tool usage can get flaky unless orchestrated cleanly.
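Here is a rough sketch of why prompt-bound memory forgets. It assumes a hypothetical `PromptWindowMemory` class and uses a word count as a crude proxy for tokens; real systems count tokens and often summarize rather than drop old turns.

```python
from collections import deque

class PromptWindowMemory:
    """Keeps only the most recent turns that fit a word budget (a rough proxy for tokens)."""

    def __init__(self, max_words: int) -> None:
        self.max_words = max_words
        self.turns: deque[str] = deque()

    def add(self, turn: str) -> None:
        self.turns.append(turn)
        # Evict the oldest turns until the window fits the budget again.
        while len(self.turns) > 1 and sum(len(t.split()) for t in self.turns) > self.max_words:
            self.turns.popleft()

    def prompt(self) -> str:
        return "\n".join(self.turns)

memory = PromptWindowMemory(max_words=12)
memory.add("User: our startup is B2B")            # 5 words
memory.add("Agent: searching competitors now")    # 4 words, 9 in total
memory.add("Agent: found five similar products")  # 5 more words: over budget, oldest turn is evicted

print("B2B" in memory.prompt())  # prints: False
```

The fact the user shared first is the first thing to fall out of the window, which is exactly the failure mode described above.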

MCP: Think of it like Long-Term Memory with structure

This isn’t a framework, a package, or a chatbot tool. It’s a protocol, designed to structure long, evolving LLM conversations across multiple interactions, tools, and goals.

MCP was introduced to solve this problem:

LLMs can only handle so much context (even at 1M tokens). How do you maintain a coherent memory and reasoning flow across many tasks, tools, retries, and modules?

So what is MCP really? Here's the breakdown:

Standardized communication: Imagine you have a bunch of different tools (a calendar app, an email service, a database). Traditionally, an AI model would need specific, custom code to interact with each of these. MCP provides a common, standardized way for models to "talk" to any tool that supports the protocol. It's like a universal adapter, akin to USB-C for AI apps, as some have called it.

Exposing capabilities: MCP allows systems to "expose" their capabilities as "tools" to AI models. So, your calendar app can tell an AI, "Hey, I can create events, check your availability, and list your appointments," in a format the AI understands.

Tool discovery: An AI agent, using MCP, can dynamically discover what tools are available and how to use them, rather than having them hardcoded. This makes AI systems much more flexible and adaptable. Developers don't have to painstakingly integrate each new tool.

Context management: It gives the AI a consistent way to pull in and carry context across different interactions and tool uses. This is crucial for multi-step tasks where the AI needs to remember previous information to make informed decisions.
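The discovery and calling flow described in this breakdown can be sketched with plain JSON messages. MCP messages follow JSON-RPC 2.0, and `tools/list` and `tools/call` are the method names the protocol uses for tool discovery and invocation. Everything else here is an assumption for illustration: the calendar tool, the `handle` and `rpc` helpers, and the in-process "server" are hypothetical, this is not the official SDK, and the sketch skips the initialization handshake and the real transport.

```python
import json

# Hypothetical calendar tool, described the way a server advertises tools:
# a name, a human-readable description, and a JSON Schema for the arguments.
CALENDAR_TOOLS = [{
    "name": "create_event",
    "description": "Create a calendar event",
    "inputSchema": {
        "type": "object",
        "properties": {"title": {"type": "string"}, "date": {"type": "string"}},
        "required": ["title", "date"],
    },
}]

# Hypothetical implementations, keyed by tool name.
HANDLERS = {"create_event": lambda args: f"Created '{args['title']}' on {args['date']}"}

def handle(raw_request: str) -> str:
    """Toy in-process server: answers tool discovery and tool calls."""
    request = json.loads(raw_request)
    if request["method"] == "tools/list":
        result = {"tools": CALENDAR_TOOLS}
    elif request["method"] == "tools/call":
        params = request["params"]
        text = HANDLERS[params["name"]](params["arguments"])
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})

def rpc(request_id, method, params=None):
    """Client helper: serialize a request, send it to the toy server, parse the reply."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.loads(handle(json.dumps(message)))

# 1. Discover what the server can do, with nothing hardcoded on the client.
discovered = rpc(1, "tools/list")["result"]["tools"]
tool_name = discovered[0]["name"]

# 2. Call the discovered tool by name with structured arguments.
reply = rpc(2, "tools/call", {"name": tool_name,
                              "arguments": {"title": "Demo day", "date": "2025-07-01"}})
print(reply["result"]["content"][0]["text"])  # prints: Created 'Demo day' on 2025-07-01
```

Because the client learns the tool name and argument schema at runtime, swapping the calendar for an email service means changing the server, not the client.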

It’s a structured way to:

Maintain persistent memory across tool calls and reasoning steps,

Pass around semantic context (what we’re doing and why),

Make tool-augmented agents smarter, not forgetful,

And orchestrate multiple agents, tools, and plans in a consistent way.

You can think of it like a protocol buffer for thoughts: an agreed format for the agent and tools to communicate clearly, keep track of past steps, and not go blank mid-task.

Real-World Example: Building a Startup Advisor AI

Let’s say you’re building a system called FounderGPT, an AI advisor for early-stage startup founders. The goal? Help them take a vague startup idea and turn it into a detailed pitch deck with market analysis, roadmap, and technical feasibility report.

Sounds like a cool AI product, right? Now, let's look at two different implementations:

With just AI Agents:

You develop an agent that can:

Ask founders questions about their idea

Research competitors on the web

Generate a business model canvas

Suggest a tech stack

Create slide content

This agent uses ReAct-style prompting: it thinks, picks a tool, acts, sees what happens, and loops.

At first, this seems powerful. The agent searches Crunchbase, reads blog posts, summarizes trends, and starts generating the pitch deck.

But then problems creep in:

It forgets that the user said the startup is “B2B” two steps ago, and suggests a B2C go-to-market plan.

When generating the tech stack, it doesn’t remember the budget constraints shared earlier.

The final slides have inconsistencies: Slide 2 says the TAM is $50B, Slide 6 says $200B.

The culprit? Context fragmentation. The agent’s memory is bound to the current prompt window, and once it switches tools or goes too deep in the reasoning loop, things get fuzzy.

Now, with MCP:

Instead of treating each step as a one-off LLM prompt, you structure the entire process using MCP:

Each step (market analysis, competitor research, roadmap planning, pitch generation) adds context to a shared memory.

When the user says “We’re building for mid-sized SaaS companies,” that fact is stored semantically and retrieved as relevant context for downstream tasks.

As the agent reasons about each part of the pitch, MCP ensures continuity: decisions made earlier (e.g., go-to-market strategy, pricing model) are tracked and enforced in later stages.

If the LLM chooses a tool (like a market size calculator), the input/output is wrapped and stored for the next reasoning step.

Now when Slide 6 is generated, the agent knows what Slide 2 said because MCP ensures the memory isn’t just a string of tokens, but a structured map of what has been said, decided, and done.
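What a "structured map" of memory could look like is easiest to see in code. The storage format isn't specified here, so treat this as a hypothetical sketch: `SharedContext`, `render_slide`, and the `market_size_calculator` output are all invented for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class SharedContext:
    """Hypothetical structured memory: named facts plus a log of tool calls."""
    facts: dict[str, str] = field(default_factory=dict)
    tool_log: list[dict] = field(default_factory=list)

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value

    def record_tool_call(self, tool: str, tool_input: str, output: str) -> None:
        # Wrap the input/output so later steps can reuse it instead of recomputing.
        self.tool_log.append({"tool": tool, "input": tool_input, "output": output})

def render_slide(number: int, title: str, ctx: SharedContext) -> str:
    # Every slide reads from the same stored facts, so figures cannot drift apart.
    return f"Slide {number}: {title} | segment={ctx.facts['segment']} | TAM={ctx.facts['tam']}"

ctx = SharedContext()
ctx.remember("segment", "mid-sized SaaS companies")
ctx.record_tool_call("market_size_calculator", "mid-sized SaaS", "$50B")
ctx.remember("tam", ctx.tool_log[-1]["output"])

slide_2 = render_slide(2, "Market", ctx)
slide_6 = render_slide(6, "Financials", ctx)
print(slide_2)
print(slide_6)
```

Both slides quote the same TAM because neither one asks the model to recall it; they both look it up.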

Result?

Fewer hallucinations

Higher consistency

Much better user trust

And here’s the kicker: now if the founder comes back a week later and says “We’re pivoting to enterprise instead of SMB,” the system can update the relevant decisions in memory and regenerate just the affected parts of the pitch.
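One way to regenerate "just the affected parts" is to track which stored facts each section depends on. This is a sketch under assumptions: the section names, the fact keys, and the `sections_to_regenerate` helper are all hypothetical.

```python
# Hypothetical map from each pitch section to the stored facts it depends on.
SECTION_DEPENDENCIES: dict[str, set[str]] = {
    "go_to_market": {"segment", "pricing_model"},
    "market_size": {"segment"},
    "tech_stack": {"budget"},
    "team": set(),
}

def sections_to_regenerate(changed_facts: set[str]) -> list[str]:
    """Return the sections that read at least one changed fact, sorted by name."""
    return sorted(
        section
        for section, needed in SECTION_DEPENDENCIES.items()
        if needed & changed_facts
    )

facts = {"segment": "SMB", "pricing_model": "per-seat", "budget": "$50k"}

# A week later the founder pivots: only the segment changes.
facts["segment"] = "enterprise"
stale = sections_to_regenerate({"segment"})
print(stale)  # prints: ['go_to_market', 'market_size']
```

The tech stack and team sections are left alone, since nothing they depend on changed.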

You’re no longer just chatting with a smart assistant. You’re collaborating with an intelligent system that remembers, adapts, and evolves.

Why Does This Matter to You and Me?

This distinction might seem a bit technical, but the implications are pretty profound for the future of how we interact with technology.

More capable and useful AI: This synergy means we're rapidly moving towards AI that can do more than just answer questions or generate text. We're talking about AI that can actively do things for us: automate complex workflows, manage intricate projects, and even act as a hyper-efficient personal assistant across all our digital tools. Imagine an AI booking your entire travel itinerary, from flights and hotels to local experiences, all based on a few simple prompts.

Faster development and innovation: For developers, MCP is a game-changer. It means less time spent on tedious "glue code" to connect different systems and more time building truly intelligent behaviors and applications. This accelerates the pace at which new and more powerful AI applications can be created and deployed.

Increased interoperability: Imagine your different AI tools (your calendar AI, your email AI, your task management AI) being able to seamlessly communicate and coordinate with each other because they all speak the "MCP language." This creates a truly integrated and intelligent digital ecosystem, breaking down the silos that often exist between different software.

Enhanced security and governance: While more autonomy can raise concerns, the standardization that MCP brings also offers opportunities for better security and governance. Because interactions are structured and defined by a protocol, it becomes easier to log, monitor, and control what AI agents are doing, ensuring they operate within defined boundaries and permissions. Every action can be transparently tracked.
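To show why structured tool calls are easier to log and control, here is a small sketch. `AuditedToolbox`, the agent name, and the two calendar tools are hypothetical, and this permission check is a plain allow-list rather than anything the protocol itself mandates.

```python
from typing import Callable

class AuditedToolbox:
    """Hypothetical wrapper: every tool call is permission-checked and logged."""

    def __init__(self, tools: dict[str, Callable[..., str]], allowed: set[str]) -> None:
        self.tools = tools
        self.allowed = allowed
        self.audit_log: list[dict] = []

    def call(self, agent: str, tool: str, **arguments: str) -> str:
        entry = {"agent": agent, "tool": tool, "arguments": arguments}
        if tool not in self.allowed:
            # Denied calls are logged too, so attempts are visible afterwards.
            entry["status"] = "denied"
            self.audit_log.append(entry)
            raise PermissionError(f"{agent} may not call {tool}")
        entry["status"] = "ok"
        self.audit_log.append(entry)
        return self.tools[tool](**arguments)

toolbox = AuditedToolbox(
    tools={
        "list_events": lambda day: f"2 events on {day}",
        "delete_event": lambda event_id: f"deleted {event_id}",
    },
    allowed={"list_events"},
)

print(toolbox.call("travel-agent", "list_events", day="Monday"))
try:
    toolbox.call("travel-agent", "delete_event", event_id="42")
except PermissionError as exc:
    print(exc)
print([entry["status"] for entry in toolbox.audit_log])  # prints: ['ok', 'denied']
```

Because every call passes through one structured choke point, the audit trail and the permission boundary come almost for free.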

So Where’s The Future in Tech?

Here’s my take:

Agents are the spark: they show LLMs can do more than talk.

MCP is the scaffolding: it helps LLMs think across time, memory, and action.

Together, they’re the architecture for the next wave of AI systems: research copilots, personal assistants, multi-day workflows, autonomous debugging agents, and beyond.

In a way, MCP is the invisible hand behind smarter agents. It's what will let them remember what you told them yesterday, track why they made a decision, and course-correct instead of just guessing. And in a few years, if your agent feels like an actual team member, not just a parrot with tools, it’s because someone added a little MCP magic behind the scenes.

Until next time,

Stay curious, stay innovative, and subscribe to get more newsletters like this one.
