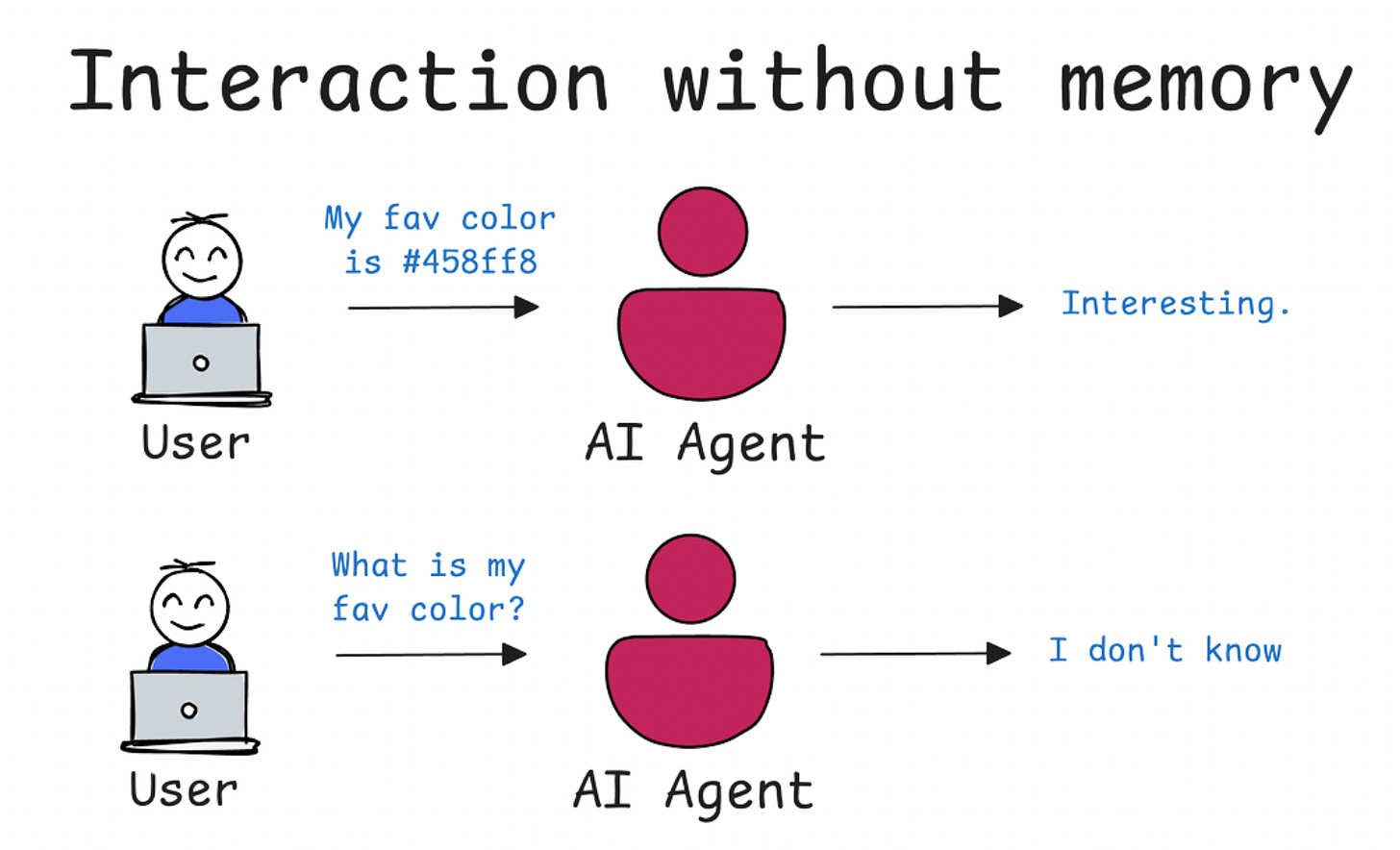

Ever felt like you’re having the same conversation with your AI assistant over and over again? You tell it something important, and five minutes later, it’s gone. For a long time, that’s been the reality of most AI assistants. They were incredibly smart but had the memory of a goldfish.

But what if that's changing? Some AI assistants can now remember our conversations from last week, recall our preferences, and learn from our interactions over time. It’s one of the most exciting frontiers in artificial intelligence right now, and it’s what I want to dive into in today’s edition of Where’s The Future in Tech: the fascinating world of AI memory. Let’s break down how AI is finally getting a memory of its own.

Why AI Needs a Memory

At its core, memory is what gives us context. It’s the thread that connects our past experiences to our present actions. For AI, it’s the bridge between being a clever tool and a truly intelligent partner. Without memory, an AI can't:

Personalize experiences: It can't remember that you prefer concise bullet points over long paragraphs or that you're a vegetarian when recommending recipes.

Learn from interactions: It can't get better at helping you because it starts fresh every single time.

Handle complex tasks: Imagine trying to write a report with an assistant who forgets the project's goal every time you give it a new piece of data.

Giving AI a memory is about making it more useful, personal, and, dare I say, more human-like in its ability to assist us.

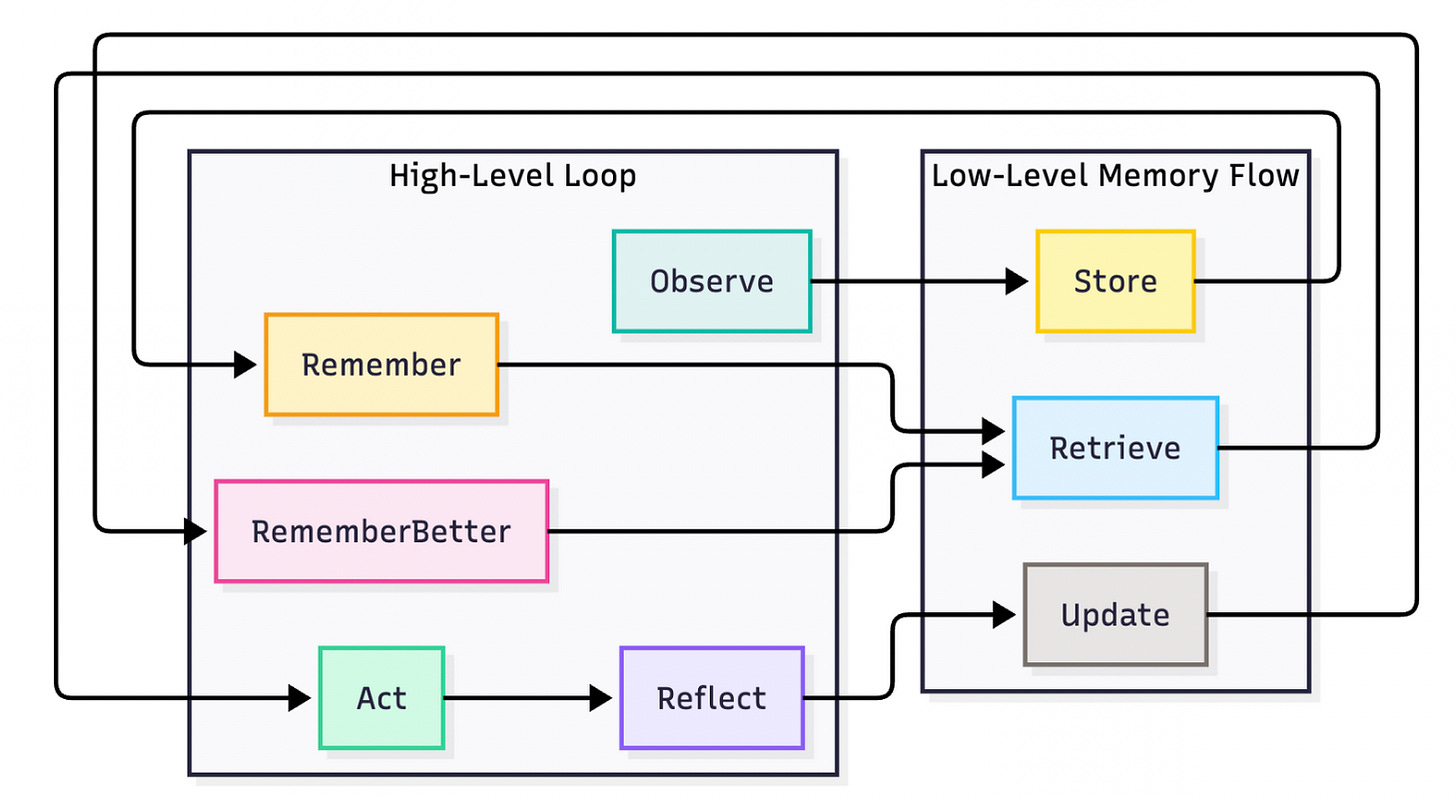

The Memory Loop

At its heart, an agent's memory works as a continuous cycle that lets the AI perceive, act, and learn from its experiences. The whole process can be broken down into a powerful five-step loop.

Observe: First, the agent perceives the task or user input. This is its "eyes and ears," taking in the current situation.

Remember: It then stores the immediate context and relevant history. This isn't just about logging words; it's about capturing the "what" and "why" of the moment.

Act: Armed with this context, the agent takes an action or makes a decision. This could be writing a line of code, answering a question, or using a specific tool.

Reflect: After the action, the agent evaluates what happened. Was the outcome a success or a failure? Did it move closer to the goal?

Update memory: Finally, and most crucially, it feeds these new learnings and insights back into its memory bank.

This loop of Observe → Remember → Act → Reflect → Update is what gives an agent the ability to improve on the fly, transforming each interaction into a lesson learned.
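To make the loop concrete, here is a minimal sketch in Python. It is a toy, not how any particular framework implements agents: the strategy names, the `LoopAgent` class, and the caller-supplied `check` function are all hypothetical. The point is only to show each of the five steps as a line of code, and how a remembered failure changes the next action.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoopAgent:
    """Toy agent walking through Observe -> Remember -> Act -> Reflect -> Update."""

    strategies: list[str]                             # hypothetical actions the agent can try
    memory: list[dict] = field(default_factory=list)  # lessons kept between runs

    def run(self, task: str, check: Callable[[str], bool]) -> str:
        # Observe: take in the task (normalized so repeats match).
        observation = task.strip().lower()
        # Remember: recall which strategies already failed on this task.
        failed = {m["strategy"] for m in self.memory
                  if m["task"] == observation and not m["success"]}
        # Act: pick the first strategy not known to fail (fall back to all if every one failed).
        candidates = [s for s in self.strategies if s not in failed] or self.strategies
        strategy = candidates[0]
        # Reflect: evaluate the outcome with a caller-supplied check.
        success = check(strategy)
        # Update memory: store the lesson so the next run can use it.
        self.memory.append({"task": observation, "strategy": strategy, "success": success})
        return strategy


def works(strategy: str) -> bool:
    # Pretend only the cache fixes this particular task.
    return strategy == "use_cache"


agent = LoopAgent(strategies=["retry", "use_cache", "ask_user"])
print(agent.run("Fetch report", works))  # retry      (fails, and the failure is remembered)
print(agent.run("Fetch report", works))  # use_cache  (skips the remembered failure)
```

In a real agent, "Act" would call an LLM or a tool and "Reflect" would be a richer evaluation, but the shape of the loop stays the same.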

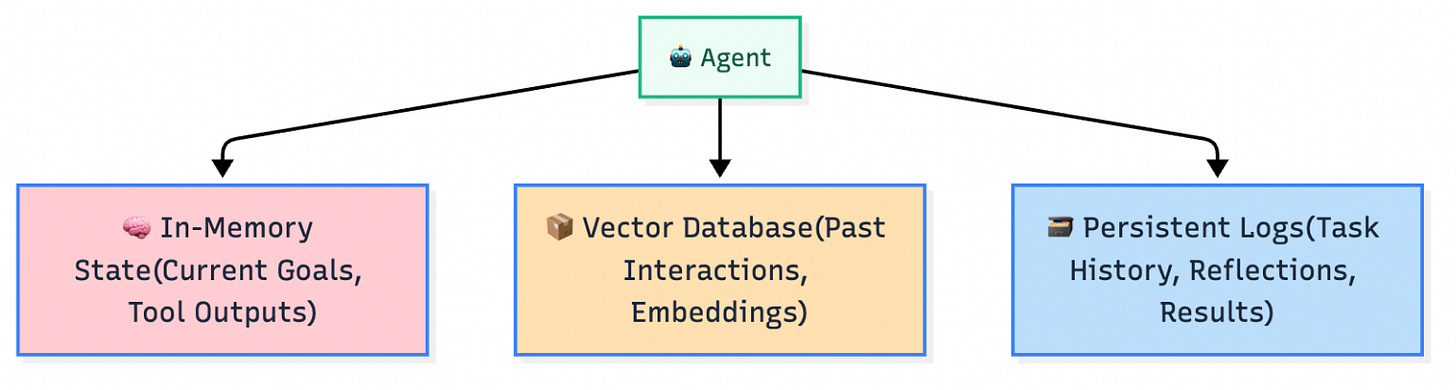

Where Is Memory Stored?

So where does all this information live? In agentic systems, memory is carefully organized across different storage layers, each with a specific job. Think of it like a highly organized workshop.

In-memory state: This is the agent's short-term, temporary workspace. It holds the information needed for the current task at hand, like the immediate goals you've given it or the output from a tool it just used.

Persistent logs: This is where the agent keeps a long-term record of events, reflections, and task outcomes across different sessions. Like a detailed project notebook, it logs the history of what it did, how it did it, and what the final result was. This ensures that learnings from one task aren't lost before the next one begins.

Vector databases: This is where things get really futuristic. A vector database stores past interactions not as simple text, but as embeddings: rich, numerical representations of the data. An embedding captures the semantic meaning, or "vibe," of a piece of information. This allows the agent to retrieve memories based on contextual similarity, not just keyword matches. It’s less like searching a document for a word and more like asking your brain to "find memories that feel like this one."
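The difference between the first two layers is easy to see in code. The sketch below is an illustrative assumption, not a standard design: it keeps in-memory state in a plain Python dict (gone when the object goes away) and persistent logs in an append-only JSON Lines file (which a later "session" can read back). The `AgentStorage` class, the file name, and the field names are hypothetical, and the vector-database layer is left out to keep it short.

```python
import json
import tempfile
from pathlib import Path


class AgentStorage:
    """Sketch of two storage layers: in-memory state and a persistent log."""

    def __init__(self, log_path: Path):
        self.state: dict = {}     # in-memory state: lost when this object is discarded
        self.log_path = log_path  # persistent log: survives across sessions

    def log_event(self, event: dict) -> None:
        # Append one JSON object per line so earlier sessions are never overwritten.
        with self.log_path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(event) + "\n")

    def load_history(self) -> list[dict]:
        # Read back everything logged in this and previous sessions.
        if not self.log_path.exists():
            return []
        with self.log_path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]


log_file = Path(tempfile.mkdtemp()) / "agent_log.jsonl"

session1 = AgentStorage(log_file)
session1.state["goal"] = "draft Q3 report"
session1.log_event({"task": "draft Q3 report", "outcome": "success"})

# A "new session": the working state is empty, but the log remembers.
session2 = AgentStorage(log_file)
print(session2.state)           # {}
print(session2.load_history())  # [{'task': 'draft Q3 report', 'outcome': 'success'}]
```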

Finding the Right Memory

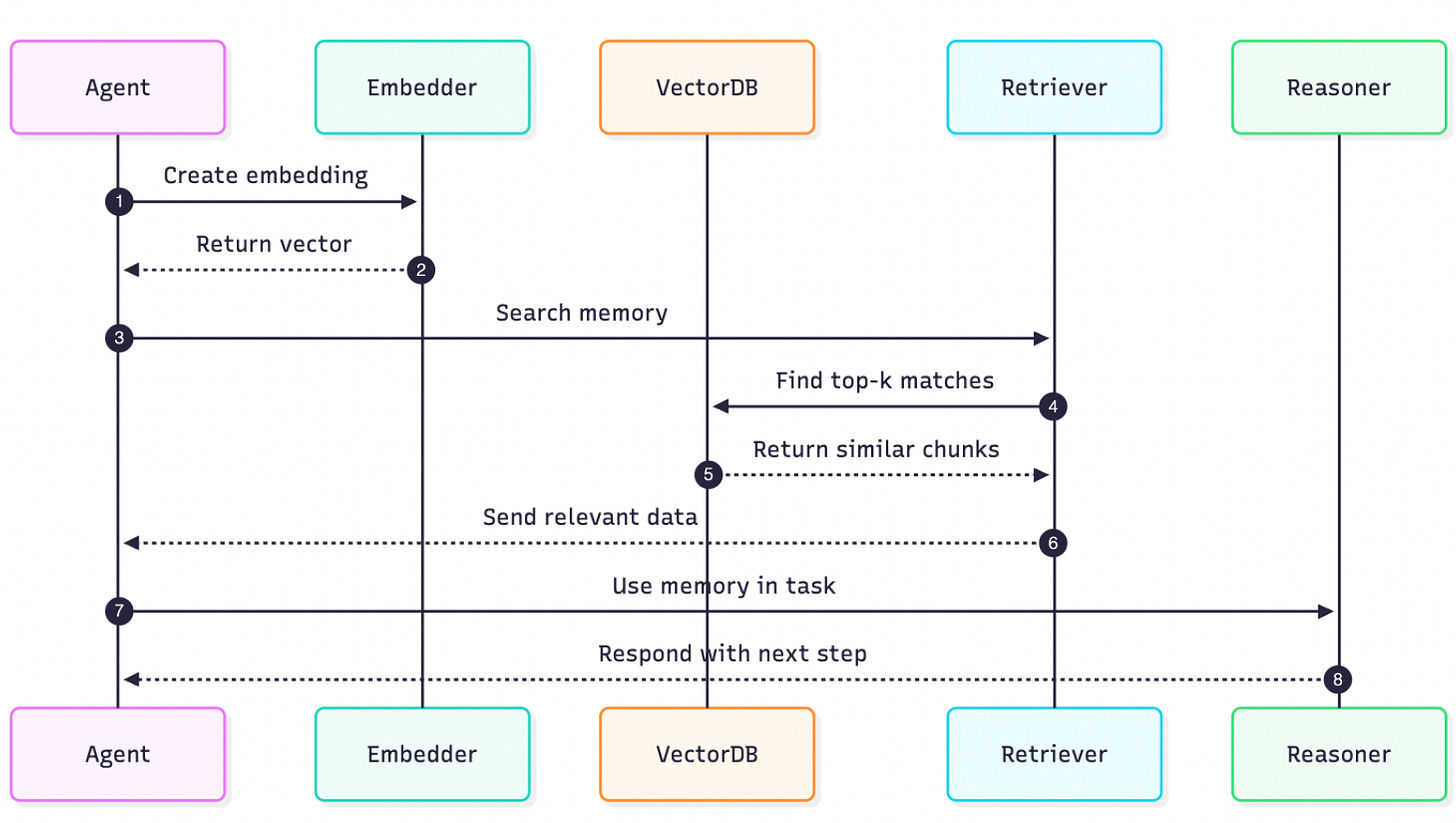

When you ask an agent a question, it doesn't painstakingly read through its entire life story. Instead, it performs an elegant, high-speed search to find the most relevant context. Here’s how that semantic search works under the hood:

Create an embedding: The agent takes your current task or question and asks an "Embedder" model to turn it into a numerical embedding.

Search memory: This new embedding is then sent to the vector database with a simple command: "find the memories most similar to this".

Find top matches: The database compares the query embedding to the stored memory embeddings and pulls back the most relevant ones, the "top-k matches." These could be similar errors from the past, successful results from a related task, or previous user goals that provide context.

Use the memory: This relevant data is then sent back to the main agent, which uses it to inform its next step.

This entire process is fast, fuzzy, and context-aware. It’s built around a powerful philosophy: "find what's useful" rather than "remember everything".
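Here is a toy version of those four steps. One big assumption: `toy_embed` is a hypothetical stand-in for a real "Embedder" model. It just counts words, so it only captures word overlap, whereas a real embedding model produces dense vectors that capture meaning. The ranking step (cosine similarity, keep the top-k) is the same idea a vector database uses, although real databases rely on approximate-nearest-neighbor indexes instead of scanning every memory like this brute-force loop does.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    # Hypothetical stand-in for an Embedder model: a bag-of-words vector.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, memory: str) -> None:
        # Store each memory alongside its embedding.
        self.items.append((memory, toy_embed(memory)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = toy_embed(query)  # 1. create an embedding for the query
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)  # 2. search
        return [text for text, _ in ranked[:k]]  # 3. keep the top-k matches


store = ToyVectorStore()
store.add("Timeout error when calling the billing API")
store.add("User prefers concise bullet points")
store.add("Fixed billing API timeout by adding retries")

# 4. These matches would be handed back to the agent as context.
print(store.search("billing API timeout error", k=2))
# ['Timeout error when calling the billing API', 'Fixed billing API timeout by adding retries']
```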

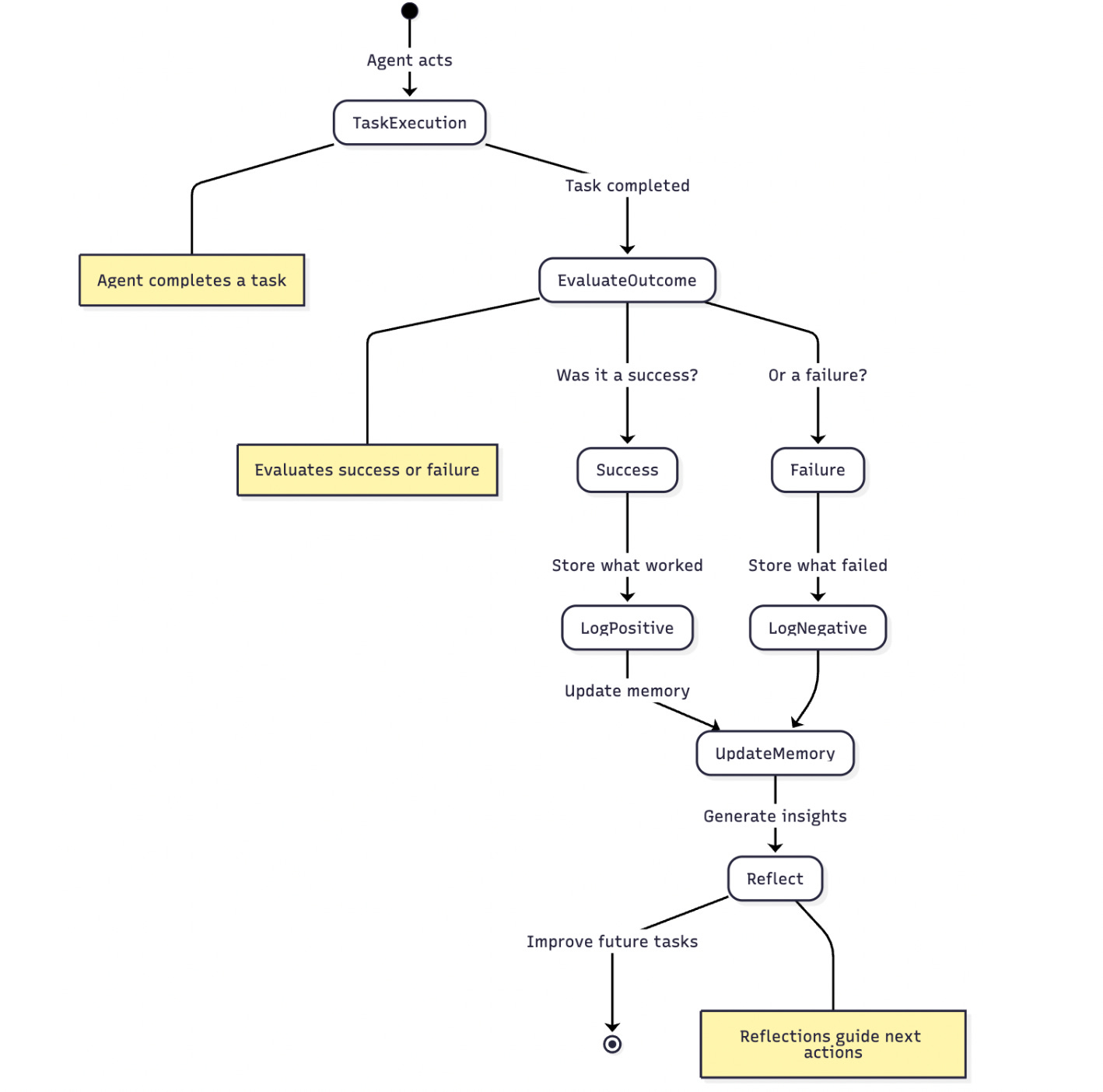

Reflection and True Learning

This is the step that separates a simple one-shot LLM from a truly agentic system. Once a task is done, the agent doesn't just move on. It stops and reflects. It performs a self-assessment, asking crucial questions:

What steps worked, and why?

Where did I struggle in this process?

Based on the outcome (success or failure), should I try a different approach next time?

The answers to these questions (the insights from the success or failure) are then logged and stored back into memory. This reflection loop is what allows the agent to learn from its actions and ensures that its future decisions are guided by past experience.
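As a rough sketch, a reflection can be stored as a small structured record that answers those three questions. This is a simplified, rule-based stand-in: in many real agents the LLM itself is prompted to write the critique. The `Step` type, the `reflect` function, and the field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Step:
    action: str
    ok: bool


def reflect(task: str, steps: list[Step], succeeded: bool) -> dict:
    """Turn a finished task into a lesson that can be stored in memory."""
    return {
        "task": task,
        "succeeded": succeeded,
        "worked": [s.action for s in steps if s.ok],         # What steps worked?
        "struggled": [s.action for s in steps if not s.ok],  # Where did I struggle?
        "change_approach": not succeeded,                    # Try something different next time?
    }


memory: list[dict] = []
memory.append(reflect(
    "summarize PDF",
    [Step("extract text", True), Step("parse tables", False), Step("write summary", True)],
    succeeded=False,
))
print(memory[0]["struggled"])  # ['parse tables']
```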

Types of Memory

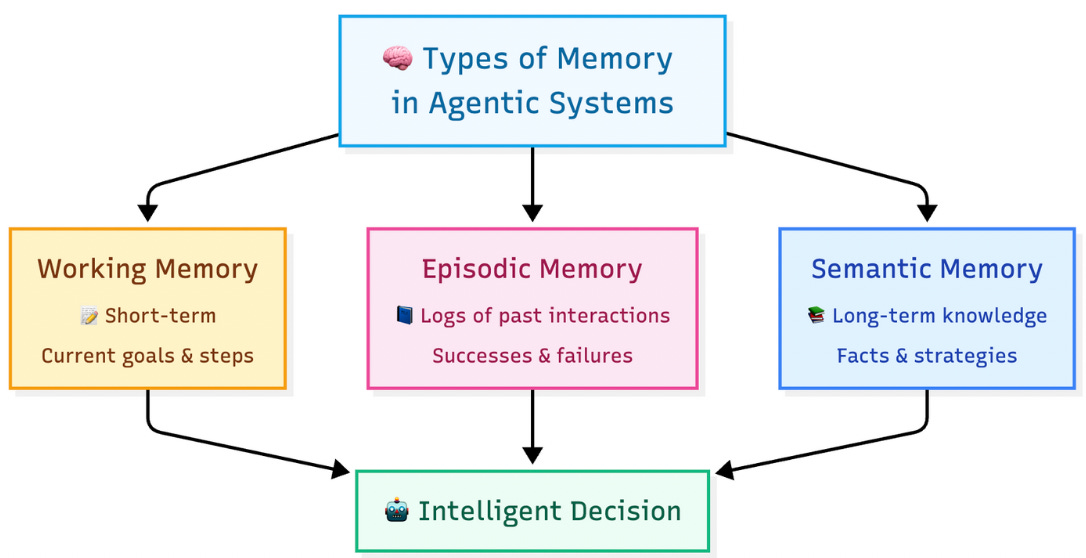

To make intelligent decisions, agents combine different types of memory, much like our own brains do.

Working memory: Think of this as the agent's mental sticky notes. It's short-term and holds the current instructions, goals, and steps for the task in progress.

Short-term memory: This is the AI's ability to hold information temporarily to complete an immediate task. In chat assistants like ChatGPT, this is often called the "context window."

What it is: It's a fixed-size space, measured in tokens (small chunks of words), where the AI holds the current conversation. Every word you type and every word it generates takes up room in this window.

How it works: Think of it as the AI's RAM. It’s fast and essential for keeping the conversation coherent. The AI can "see" everything in the window to understand the context of your latest question.

The limitation: Once a conversation grows past the context window, many chat applications drop (or summarize) the oldest messages to make room for new ones. This is why an AI might forget what you talked about at the beginning of a very long chat. It's temporary by design.
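The "oldest parts get forgotten" behavior can be sketched as a sliding window. Two simplifying assumptions here: tokens are approximated by whitespace-separated words (real models use their own tokenizers), and overflowing messages are simply dropped rather than summarized. `fit_context_window` is a hypothetical helper name.

```python
def fit_context_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the window."""
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message so recent turns survive.
    for msg in reversed(messages):
        cost = len(msg.split())  # crude token estimate: one token per word
        if used + cost > max_tokens:
            break  # this message and everything older is "forgotten"
        kept.append(msg)
        used += cost
    return list(reversed(kept))  # restore chronological order


chat = [
    "My company is called Innovate Next",  # 6 words
    "Suggest three slogan ideas",          # 4 words
    "Keep them under five words",          # 5 words
]
print(fit_context_window(chat, max_tokens=10))
# ['Suggest three slogan ideas', 'Keep them under five words']
```

The company name falls out of the window first, which is exactly the kind of detail a user expects the assistant to still know.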

Episodic memory: This is the agent's task diary. It logs the history of past experiences: specific successes, failures, and user interactions.

Semantic memory: This is the agent's long-term knowledge base, its internal encyclopedia. It stores general facts, patterns, and strategies that it has learned over time.

Long-term memory: This is the holy grail. It’s where we give an AI a persistent "database" of information it can draw on long after a conversation ends. This is what allows an AI to remember you between conversations, days, or even months apart.

What it is: A way for the AI to store and, more importantly, retrieve key information from past interactions.

How it works: One of the most popular techniques right now is called Retrieval-Augmented Generation (RAG). It works a bit like this:

Storing memories: When you share an important fact (e.g., "My company's name is 'Innovate Next'"), the AI doesn't just "hear" it. It converts this information into a mathematical representation called a "vector" and stores it in a special kind of database, a Vector Database.

Recalling memories: Later, when you ask a relevant question (e.g., "What are some marketing ideas for my company?"), the AI first queries this database to find the most relevant stored memories.

Using memories: It then "augments" its prompt with this retrieved memory ("The user's company is 'Innovate Next'") before generating a response.
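The store, recall, and augment steps fit in a few lines of Python. To keep the focus on the "augment" step, recall here scores facts by shared words, a crude stand-in (an assumption, not how production RAG works) for the vector-database similarity search described above. `MemoryRAG` and the prompt wording are hypothetical; the resulting prompt is what would be sent to the model.

```python
import re


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


class MemoryRAG:
    """Minimal store -> recall -> augment loop."""

    def __init__(self) -> None:
        self.facts: list[str] = []

    def store(self, fact: str) -> None:
        # Storing memories (a real system would embed and index the fact).
        self.facts.append(fact)

    def recall(self, question: str, k: int = 1) -> list[str]:
        # Recalling memories: rank facts by word overlap, ignore unrelated ones.
        q = words(question)
        scored = [(len(q & words(f)), f) for f in self.facts]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [f for _, f in scored[:k]]

    def augment(self, question: str) -> str:
        # Using memories: prepend what was recalled before the question.
        recalled = self.recall(question)
        if not recalled:
            return f"Question: {question}"
        context = "\n".join(f"- {f}" for f in recalled)
        return f"Known about the user:\n{context}\n\nQuestion: {question}"


rag = MemoryRAG()
rag.store("The user's company is 'Innovate Next'")
rag.store("The user is vegetarian")
print(rag.augment("What are some marketing ideas for my company?"))
```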

The fusion of what the agent is doing now (Working), what it has done before (Episodic), and what it knows in general (Semantic) is what leads to a truly intelligent decision.
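One way to picture that fusion is a single object that pulls from all three stores when the agent prepares to decide. This is an illustrative sketch only: the `AgentMemory` class, its fields, and the example goal, episode, and fact are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    working: dict = field(default_factory=dict)         # what it is doing now
    episodic: list[dict] = field(default_factory=list)  # what it has done before
    semantic: dict = field(default_factory=dict)        # what it knows in general

    def build_context(self, topic: str) -> str:
        # Working memory: the current goal.
        lines = [f"Current goal: {self.working.get('goal', 'none')}"]
        # Episodic memory: past experiences on the same topic.
        for ep in self.episodic:
            if ep["topic"] == topic:
                lines.append(f"Past attempt: {ep['summary']}")
        # Semantic memory: general knowledge about the topic.
        if topic in self.semantic:
            lines.append(f"Known fact: {self.semantic[topic]}")
        return "\n".join(lines)


mem = AgentMemory(
    working={"goal": "write the Q3 sales report"},
    episodic=[{"topic": "reports", "summary": "Q2 report was rejected for lacking charts"}],
    semantic={"reports": "Finance expects one chart per section"},
)
print(mem.build_context("reports"))
```

The combined context (current goal, a relevant past failure, and a general rule) is what lets the agent avoid repeating last quarter's mistake.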

The Future

This isn't just theory; it's being actively built today. Modern frameworks like LangChain, LangGraph, LlamaIndex, and CrewAI all come with built-in support for these memory systems, from simple conversation buffers to complex long-term retrievers, and the technology is evolving at lightning speed. Take a look at emerging architectures like Mem0, which is built specifically for agents. It aims to make memory management automatic and relevance-driven, deciding what to remember and how to consolidate it, much like a human does.

We are finally building AIs that don't just process information, but build a relationship with it. An AI that remembers, learns, and improves is the difference between a clever tool and a true collaborator.

Until next time,

Stay curious, stay innovative, and subscribe for more newsletters like this one.
