10 GenAI/ML Questions to Crack AI Interviews in 2025

10 real-world questions that actually test you and prepare you for interviews in this evolving AI market.

So you’ve gone through the classics: linear regression assumptions, types of gradient descent, softmax vs sigmoid. But guess what? Top-tier AI interviews won’t waste time on that. They’re gunning for one thing: can you think like a researcher, product owner, or applied ML engineer when things get messy?

Fr bro, my uno reverse question is: why are you hiring? Why did the last person leave?

But jokes apart, in today’s edition of Where’s The Future in Tech, I’ll walk you through 10 curated AI & GenAI interview questions: no fluff, no “what is overfitting” nonsense. Just real scenarios, deep thinking, and solutions that hold up under scrutiny.

1. Explain how you would evaluate the performance of a RAG pipeline.

What they're testing: Holistic RAG understanding: can you go beyond accuracy and talk about faithfulness, relevance, and retrieval quality?

Solution: Evaluating a RAG pipeline means you’re looking at two systems that need to be excellent on their own and work well together: retrieval and generation. First, for the retriever, you need to assess whether it's bringing back the right documents in response to a query. This is where metrics like Precision@k, Recall@k, and Mean Reciprocal Rank (MRR) shine. They help determine how often relevant documents appear in the top-k results and how early those relevant hits show up.
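The retriever metrics above are simple enough to compute by hand. Here is a minimal sketch, assuming each query comes with a ranked list of document IDs and a labeled set of relevant IDs. The function names and sample IDs are illustrative and not taken from any particular library.

```python
from typing import Iterable, Sequence, Set, Tuple


def precision_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Share of the top-k results that are relevant (divides by k by convention)."""
    if k <= 0:
        return 0.0
    return sum(doc in relevant for doc in ranked[:k]) / k


def recall_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Share of all relevant documents that show up in the top-k results."""
    if not relevant:
        return 0.0
    return sum(doc in relevant for doc in ranked[:k]) / len(relevant)


def reciprocal_rank(ranked: Sequence[str], relevant: Set[str]) -> float:
    """1 / rank of the first relevant hit, or 0.0 if there is none."""
    for rank, doc in enumerate(ranked, start=1):
        if doc in relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs: Iterable[Tuple[Sequence[str], Set[str]]]) -> float:
    """Average reciprocal rank over (ranked, relevant) pairs, one per query."""
    scores = [reciprocal_rank(ranked, relevant) for ranked, relevant in runs]
    return sum(scores) / len(scores) if scores else 0.0


# Made-up example: assumes document IDs in a ranking are unique.
ranked = ["d3", "d1", "d7", "d2"]
relevant = {"d1", "d2"}
print(precision_at_k(ranked, relevant, k=3))  # 1 relevant hit in the top 3
print(recall_at_k(ranked, relevant, k=3))     # 0.5
print(mean_reciprocal_rank([(ranked, relevant), (["d5", "d9"], {"d5"})]))  # 0.75
```

Precision@k and Recall@k tell you how much of the good stuff lands in the top-k, while MRR rewards putting the first relevant hit early.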

But that’s only half the story.

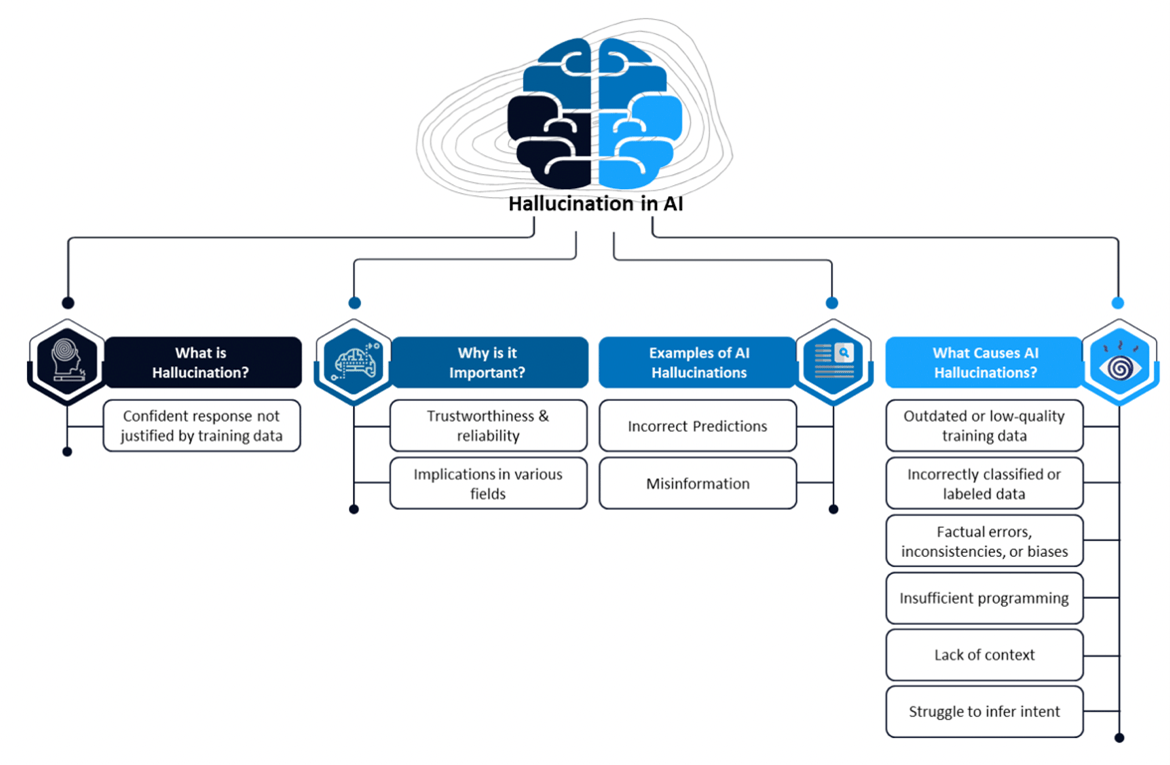

The generation component needs to be assessed on faithfulness: does it hallucinate, or does it stay grounded in the retrieved data? Here, datasets like FEVER and TruthfulQA are excellent benchmarks. You’re also checking for relevance, which can be measured by lexical overlap between the generated content and the original user query, or with semantic similarity scores.

Finally, combine these quantitative metrics with human evaluations and user feedback loops, which are crucial for understanding nuance: did the answer feel useful, trustworthy, and well-articulated?
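To make the lexical-overlap idea concrete, here is a rough sketch. It assumes plain token overlap is an acceptable first proxy, and the `coverage` helper and sample strings are made up for illustration. The same function also gives a crude grounding signal when you compare the answer against the retrieved context.

```python
import re
from typing import Set


def tokens(text: str) -> Set[str]:
    """Lowercased alphanumeric tokens; no stemming or stop-word removal."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def coverage(source: str, target: str) -> float:
    """Fraction of distinct tokens in `source` that also appear in `target`."""
    src = tokens(source)
    if not src:
        return 0.0
    return len(src & tokens(target)) / len(src)


# Hypothetical query, retrieved context, and generated answer.
query = "What is the refund window?"
context = "Refunds are accepted within a 30 days window."
answer = "The refund window is 30 days."

relevance = coverage(query, answer)   # query terms echoed in the answer
support = coverage(answer, context)   # answer terms backed by the context
print(relevance, support)             # 0.8 0.5
```

Notice that "refund" and "refunds" do not match here. That blind spot is why semantic similarity scores are usually worth adding on top of lexical overlap.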

2. You're asked to reduce hallucinations in a Generative QA system built using RAG. How would you approach it?

What they're testing: Deep grasp of faithfulness in generation and your practical ability to refine retrieval-generation workflows.

Solution: Reducing hallucinations in a RAG-based system involves controlling how grounded the generation is in retrieved documents. The first step is optimizing the retriever to ensure it's surfacing genuinely relevant passages; this can be improved using dense retrievers like Contriever or ColBERT, fine-tuned on domain-specific data. Next, introduce filtering layers before the generator: use rerankers or document classifiers to weed out low-quality retrieved content.

Then, on the generation side, use conservative decoding (copy mechanisms, or nucleus sampling with a tighter top-p limit) to make the model less likely to invent unsupported information. Integrating citations or source attribution mechanisms during generation also enforces traceability, nudging the model to stay anchored.

And finally, close the loop: implement feedback-aware training, or use contrastive learning to penalize outputs that diverge from the retrieved context. Together, these measures shrink the gap between retrieval and generation, reducing hallucinations substantially.
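The filtering and citation steps can be sketched in a few lines. This assumes a reranker has already scored each passage. The `min_score` threshold, the prompt wording, and the abstain-on-empty behavior are illustrative choices, not a standard.

```python
from typing import List, Tuple


def filter_passages(
    scored: List[Tuple[str, float]], min_score: float = 0.5, max_keep: int = 3
) -> List[str]:
    """Keep at most `max_keep` passages whose reranker score clears `min_score`."""
    kept = [(text, score) for text, score in scored if score >= min_score]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return [text for text, _ in kept[:max_keep]]


def build_prompt(question: str, passages: List[str]) -> str:
    """Number the passages so the generator can cite them as [1], [2], ..."""
    if not passages:
        # Nothing trustworthy was retrieved: signal the caller to abstain.
        return ""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"{sources}\n\nQuestion: {question}"
    )


# Hypothetical reranker output: (passage, score) pairs.
scored = [("Passage A", 0.9), ("Passage B", 0.2), ("Passage C", 0.7)]
print(build_prompt("What changed in v2?", filter_passages(scored)))
```

Returning an empty prompt when nothing clears the bar lets the caller reply "I don't know" instead of forcing the model to improvise.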

3. A client wants to fine-tune a large language model on their proprietary dataset but has limited GPU availability. How would you proceed?

What they're testing: Practical understanding of parameter-efficient fine-tuning, trade-offs in resource-constrained settings.

Parameter Efficient Fine-Tuning

Solution: Full fine-tuning is off the table here; it’s memory-heavy and computationally expensive. Instead, the best bet is using parameter-efficient fine-tuning (PEFT) methods. Start with LoRA (Low-Rank Adaptation), which freezes the base weights and trains only small low-rank adapter matrices, dramatically cutting resource usage. If memory is severely constrained, shift to QLoRA, which combines LoRA with a quantized base model (typically 4-bit), allowing fine-tuning even on consumer-grade GPUs.

Ensure you freeze the base model and only update the injected adapter layers. Tools like Hugging Face’s PEFT library make this seamless. And remember to monitor performance closely; if the model underperforms on downstream tasks, consider selectively unfreezing key transformer blocks.
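A quick back-of-the-envelope calculation shows why LoRA is so cheap. For a weight matrix of shape d_out x d_in, LoRA trains two low-rank factors of shape d_out x r and r x d_in instead. The 4096 x 4096 layer and rank 8 below are illustrative numbers, not taken from any specific model.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is fine-tuned."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for the two LoRA factors: (d_out x r) and (r x d_in)."""
    return rank * (d_out + d_in)


# Illustrative layer size and rank.
full = full_params(4096, 4096)
lora = lora_params(4096, 4096, rank=8)
print(full)                  # 16777216
print(lora)                  # 65536
print(f"{lora / full:.2%}")  # 0.39%
```

Under these assumptions the adapter trains well under 1% of the layer's parameters. That is where most of the savings in gradient and optimizer memory come from.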

4. Design a scalable retrieval system that can handle multi-lingual queries over billions of documents.

What they're testing: Retrieval system design at scale, cross-lingual representation handling, and architecture choices.

Diagram of BERT BASE and DistilBERT model architecture

Solution: Scalability and multilingualism are a tricky combo. You start by building a dense vector index using models like LaBSE, mBERT, or multilingual DistilBERT (DistilmBERT), which encode semantic meaning across languages into a shared embedding space. Use FAISS or Weaviate for scalable vector indexing, sharded by document language or topic to optimize query time.

To maintain real-time performance, precompute and cache high-frequency query vectors. Add a language detection layer at inference to condition query embedding pipelines. Also, consider reranking retrieved passages using a multilingual cross-encoder for higher precision.

Finally, feed user interaction logs back into continual retriever training, using contrastive learning with hard negatives in multiple languages.
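The sharding idea boils down to "search each shard, then merge the top-k". Below is a toy sketch in pure Python, where brute-force cosine similarity stands in for a real vector index such as FAISS. The shard layout, document IDs, and two-dimensional vectors are all made up for illustration.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple

Shard = List[Tuple[str, Sequence[float]]]  # (doc_id, embedding) pairs


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search_shard(shard: Shard, query: Sequence[float], k: int) -> List[Tuple[float, str]]:
    """Top-k (score, doc_id) pairs within one shard."""
    return heapq.nlargest(k, ((cosine(vec, query), doc_id) for doc_id, vec in shard))


def search_all(shards: Dict[str, Shard], query: Sequence[float], k: int) -> List[str]:
    """Query every shard, then merge the per-shard winners into a global top-k."""
    candidates: List[Tuple[float, str]] = []
    for shard in shards.values():
        candidates.extend(search_shard(shard, query, k))
    return [doc_id for _, doc_id in heapq.nlargest(k, candidates)]


# Toy shards keyed by language; embeddings share one space across languages.
shards = {
    "en": [("en1", [1.0, 0.0]), ("en2", [0.0, 1.0])],
    "de": [("de1", [0.9, 0.1])],
}
print(search_all(shards, query=[1.0, 0.0], k=2))  # ['en1', 'de1']
```

Because the embedding space is shared, a German document can outrank an English one for an English query. In a real system each shard search would run in parallel on its own node.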

5. How would you evaluate whether a GenAI model trained on legal documents is giving accurate, trustworthy outputs?

What they're testing: Domain-specific evaluation mindset, legal QA faithfulness, and risk-awareness.

BERTScore computation: step-by-step process

Solution: You don’t just want accuracy; you want legally sound outputs. Start with automatic metrics like BLEU, ROUGE, or BERTScore, but understand they only scratch the surface. For a legal setting, prioritize faithfulness and justifiability. Use custom evaluation sets that contain factual traps or adversarial phrasing to test whether the model is grounded.

Implement human-in-the-loop reviews by legal professionals. Build a feedback loop into your system where legal experts can flag ambiguous or incorrect generations, and use that data to further fine-tune or align the model.

You might also integrate citation validation during generation: was the case law or statute cited actually present in the retrieved content? If not, it’s a hallucination dressed in legalese.
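A citation check like that can start out very simple. The sketch below assumes the citations have already been extracted from the generated answer (extraction itself is out of scope here) and just verifies that each one appears somewhere in the retrieved passages. The case name and statute are deliberately fake placeholders.

```python
import re
from typing import Iterable, List


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so trivial formatting differences match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unsupported_citations(citations: Iterable[str], passages: Iterable[str]) -> List[str]:
    """Return the citations that do not appear verbatim in any retrieved passage."""
    haystack = [normalize(p) for p in passages]
    return [c for c in citations if not any(normalize(c) in p for p in haystack)]


# Fake placeholder citations, not real case law.
passages = ["In Example v. Sample (2001), the court held that notice was required."]
citations = ["Example v.  Sample (2001)", "Placeholder Act s. 12"]
print(unsupported_citations(citations, passages))  # ['Placeholder Act s. 12']
```

Exact substring matching is strict on purpose. Anything it flags goes to a legal reviewer rather than being silently accepted.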

6. A fraud detection system trained on past financial transactions suddenly drops in accuracy. How would you debug this?

What they're testing: Model monitoring, concept drift detection, real-world deployment sensibility.

Solution: First, don’t blame the model; check the data. A sudden drop often means drift: either the statistical properties of the input data have changed (data drift), or the relationship between the inputs and the fraud label has changed (concept drift). Check for the former by comparing feature distributions from the training data to live traffic using tools like Kolmogorov–Smirnov tests or the Population Stability Index (PSI).
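For reference, PSI for one numeric feature can be sketched as follows. Equal-width bins taken from the training range and a small floor for empty bins are simplifying assumptions here, since production setups often use quantile bins. A commonly quoted rule of thumb treats values above roughly 0.25 as a significant shift, but treat that cutoff as a convention rather than a law.

```python
import math
from typing import List, Sequence


def bin_shares(
    values: Sequence[float], lo: float, hi: float, bins: int, floor: float = 1e-6
) -> List[float]:
    """Share of values per equal-width bin; out-of-range values go to the edge bins."""
    if not values:
        raise ValueError("values must be non-empty")
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        idx = int((v - lo) / width) if width > 0 else 0
        counts[min(max(idx, 0), bins - 1)] += 1
    # Floor empty bins so the logarithm below stays defined.
    return [max(c / len(values), floor) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    lo, hi = min(expected), max(expected)
    e = bin_shares(expected, lo, hi, bins)
    a = bin_shares(actual, lo, hi, bins)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Synthetic feature values: training data vs. live traffic shifted upward.
train = list(range(100))
live = [x + 50 for x in train]
print(psi(train, train))        # no shift
print(psi(train, live) > 0.25)  # large shift
```

Run this per feature and sort by PSI, and you get a quick shortlist of which inputs moved the most.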

If drift is confirmed, retraining might be needed. But before jumping in, inspect whether new fraud patterns are simply not represented in the training set. In that case, label recent data and use it in an incremental training setup.

Also examine your pipeline: feature generation code, input APIs, and even upstream data sources. Sometimes the model is fine, but the data isn’t what you think it is.

7. How would you optimize latency for a GenAI-powered chatbot expected to handle 1000+ concurrent users?

What they're testing: Model serving efficiency, batching, and throughput trade-offs.

Solution: First, run inference on a GPU-backed setup using optimized serving stacks like vLLM or Triton Inference Server. These support continuous or dynamic batching, allowing you to serve multiple user queries in a single forward pass.

If you’re not already using them, switch to quantized models; they cut memory use and usually inference time as well. For backend infra, use asynchronous message queues and scale horizontally using Kubernetes with autoscaling enabled.

Also consider caching outputs for frequently asked queries (e.g., "What is your refund policy?") and using early exit decoding or smaller distilled models for the first response while the full model finishes in the background.
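The caching idea is the easiest win to prototype. Here is a minimal in-memory sketch. The `ResponseCache` class and its normalization rules are hypothetical, and a production version would also need expiry and would skip caching for personalized answers.

```python
import re
from typing import Callable, Dict, List


class ResponseCache:
    """Caches answers keyed by a normalized form of the user query."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    @staticmethod
    def _key(query: str) -> str:
        # Collapse whitespace, lowercase, and drop trailing punctuation.
        text = re.sub(r"\s+", " ", query).strip().lower()
        return text.rstrip("?!. ")

    def get_or_generate(self, query: str, generate: Callable[[str], str]) -> str:
        """Return the cached answer, calling the model only on a cache miss."""
        key = self._key(query)
        if key not in self._store:
            self._store[key] = generate(query)
        return self._store[key]


# Stand-in for an expensive LLM call; records how often it is invoked.
calls: List[str] = []


def fake_model(query: str) -> str:
    calls.append(query)
    return "Refunds are available within 30 days."


cache = ResponseCache()
first = cache.get_or_generate("What is your refund policy?", fake_model)
second = cache.get_or_generate("  what is your REFUND policy ", fake_model)
print(len(calls))  # 1
```

Both phrasings hit the same cache entry, so the model runs once. A fuzzier key, such as one based on embedding similarity, would catch paraphrases too, at the cost of occasional wrong hits.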

8. You're given a retrieval system that’s returning irrelevant documents in response to niche biomedical queries. What would you do?

What they're testing: Domain-specific retrieval refinement, understanding of embeddings and training data.

Representation of a Cross-Encoder model

Solution: The irrelevance likely stems from generic embeddings. Biomedical queries need specialized understanding, so start by swapping the base model for something like BioBERT or SciBERT, which are pre-trained on domain corpora.

Fine-tune the retriever on in-domain query-document pairs; this helps align its semantic space with biomedical language. Incorporate hard negatives (similar-looking but wrong docs) during training to sharpen contrastive learning.

Finally, implement reranking with a cross-encoder fine-tuned on biomedical QA to boost top-k precision. That way, even if your initial retrieval is noisy, your top results stay highly relevant.
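Hard negatives are usually mined from the retriever's own mistakes. Here is one simple way to do it, assuming you have the current ranking for a query plus its labeled positives. The function name, the `skip_top` knob, and the document IDs are illustrative.

```python
from typing import List, Sequence, Set


def mine_hard_negatives(
    ranked: Sequence[str], positives: Set[str], n: int = 2, skip_top: int = 0
) -> List[str]:
    """Highest-ranked documents that are NOT labeled relevant for this query.

    `skip_top` optionally ignores the very top ranks, which may hide
    unlabeled positives (false negatives).
    """
    negatives = [doc for doc in ranked[skip_top:] if doc not in positives]
    return negatives[:n]


# Hypothetical ranking from the current retriever for one biomedical query.
ranked = ["p53_review", "tp53_mouse_study", "brca1_trial", "cooking_blog"]
positives = {"tp53_mouse_study"}
print(mine_hard_negatives(ranked, positives))  # ['p53_review', 'brca1_trial']
```

The documents the model ranks highly but gets wrong are exactly the "similar-looking but wrong" examples that make contrastive training bite. Easy negatives like `cooking_blog` teach it very little.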

9. Design a pipeline for continuously improving a deployed GenAI customer support model.

What they're testing: MLOps mindset, feedback loops, continuous learning, and safe deployment.

Solution: The pipeline begins with real-world feedback. Capture every customer interaction, and tag those rated poorly or escalated to human agents. Use these as fine-tuning data, either to reinforce good behaviors or mitigate failure cases.

Implement a human-in-the-loop validation system where flagged generations are reviewed and corrected, feeding these corrections into a weekly or monthly update cycle. Use RLHF (Reinforcement Learning from Human Feedback) if appropriate, especially to align tone and politeness.

Finally, set up monitoring dashboards to track latency, hallucination frequency, and user satisfaction. If any of them degrades, trigger retraining jobs or roll back to the last stable model.
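The "if any metric degrades" trigger can be sketched as a small check that compares the current window against a baseline. The metric names, the 10% tolerance, and the sample numbers below are assumptions for illustration, so tune them to your own dashboards.

```python
from typing import Dict, List

# Direction of "worse" for each tracked metric (hypothetical metric names).
HIGHER_IS_WORSE = {"p95_latency_ms": True, "hallucination_rate": True, "csat": False}


def regressions(
    baseline: Dict[str, float], current: Dict[str, float], tolerance: float = 0.10
) -> List[str]:
    """Metrics that got worse by more than `tolerance` relative to the baseline."""
    flagged = []
    for name, higher_is_worse in HIGHER_IS_WORSE.items():
        base, now = baseline[name], current[name]
        change = (now - base) / base if base else 0.0
        worse_by = change if higher_is_worse else -change
        if worse_by > tolerance:
            flagged.append(name)
    return flagged


# Made-up numbers: latency and satisfaction barely move, hallucinations jump.
baseline = {"p95_latency_ms": 800.0, "hallucination_rate": 0.04, "csat": 4.5}
current = {"p95_latency_ms": 820.0, "hallucination_rate": 0.06, "csat": 4.4}
flagged = regressions(baseline, current)
print(flagged)  # ['hallucination_rate']
if flagged:
    print("roll back to the last stable model or queue a retraining job")
```

Keeping the check separate from the action makes it easy to alert first and automate rollbacks later, once you trust the thresholds.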

10. How would you handle evaluation for a multi-modal GenAI model that takes image and text as input and generates captions?

What they're testing: Multi-modal evaluation knowledge, blending NLP and vision metrics.

Solution: Start by evaluating the textual outputs with standard metrics like BLEU, METEOR, and CIDEr. But don’t stop there; these mostly measure n-gram overlap with reference captions, so they only capture fluency and surface-level correctness. For deeper semantic relevance, use SPICE (which looks at scene graph similarity) or CLIPScore, which measures alignment between image and caption using embeddings.

To capture edge cases (e.g., sarcasm, negation), include human evaluators who score the outputs on relevance, creativity, and tone. In some cases, train classifiers to detect hallucinated elements: say, if the caption mentions a dog when none is in the image.
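Before training a classifier, a rule-based check already catches the "dog that isn't there" case. The sketch assumes you have a fixed object vocabulary and a set of objects known to be in the image, from annotations or a detector. All names and sample data are illustrative, and there is no handling of plurals or synonyms.

```python
import re
from typing import Set


def mentioned_objects(caption: str, vocabulary: Set[str]) -> Set[str]:
    """Vocabulary objects that the caption mentions (exact word match only)."""
    return set(re.findall(r"[a-z]+", caption.lower())) & vocabulary


def hallucinated_objects(caption: str, vocabulary: Set[str], in_image: Set[str]) -> Set[str]:
    """Objects the caption mentions that are not actually in the image."""
    return mentioned_objects(caption, vocabulary) - in_image


def hallucination_rate(caption: str, vocabulary: Set[str], in_image: Set[str]) -> float:
    """Share of mentioned objects that are hallucinated (0.0 if none are mentioned)."""
    mentioned = mentioned_objects(caption, vocabulary)
    if not mentioned:
        return 0.0
    return len(mentioned - in_image) / len(mentioned)


# Hypothetical vocabulary and image annotations.
vocabulary = {"dog", "cat", "ball", "park", "child"}
in_image = {"ball", "park", "child"}
caption = "A dog chasing a ball in the park"
print(hallucinated_objects(caption, vocabulary, in_image))  # {'dog'}
print(hallucination_rate(caption, vocabulary, in_image))    # 1 of 3 mentioned objects
```

Averaging this rate over a validation set gives a single number to track alongside CLIPScore and the human ratings.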

Also consider image-question pairs as inputs and assess via Visual Question Answering (VQA) metrics if your model supports interactive queries over visuals.

Until next time,

Stay curious, stay innovative, and subscribe to get more informative newsletters like this one.
